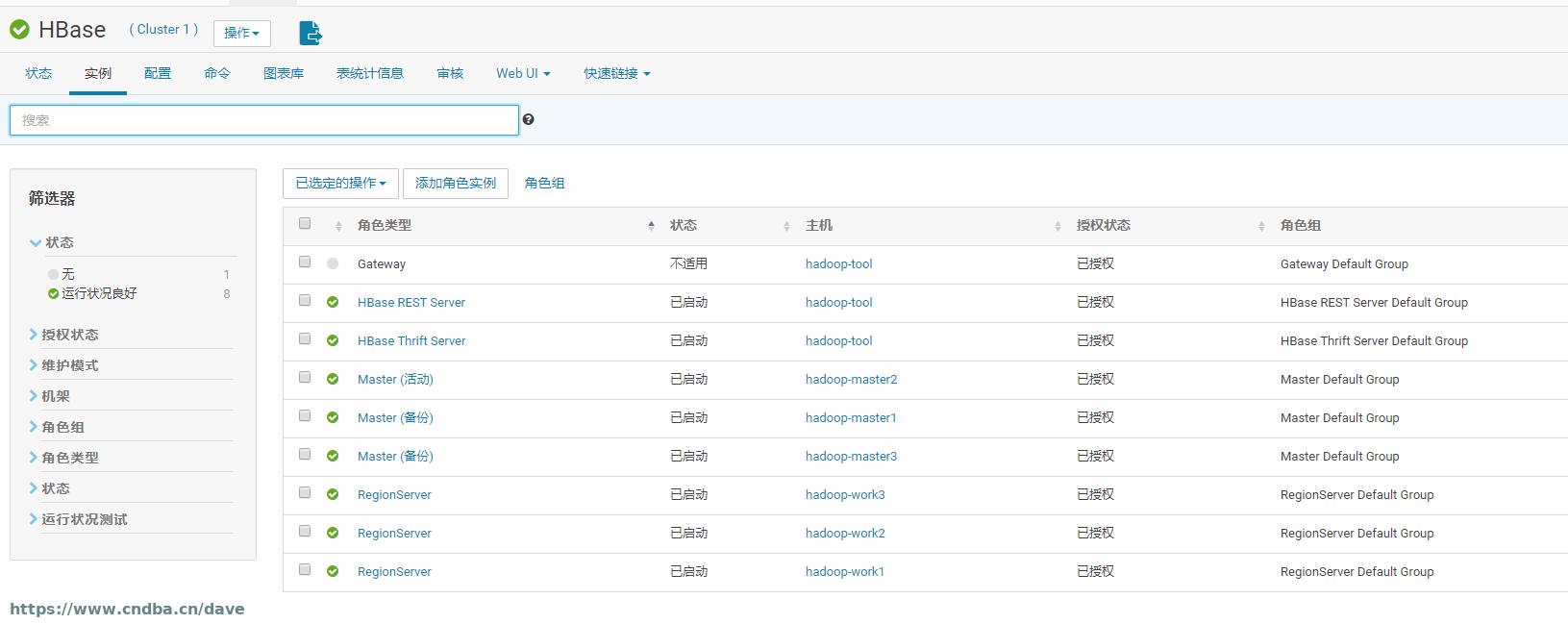

In CDH, the HBase Master process kept failing to start. The error log showed the following:

Failed to become active master

org.apache.hadoop.hbase.util.FileSystemVersionException: HBase file layout needs to be upgraded. You have version null and I want version 8. Consult http://hbase.apache.org/book.html for further information about upgrading HBase. Is your hbase.rootdir valid? If so, you may need to run 'hbase hbck -fixVersionFile'.

at org.apache.hadoop.hbase.util.FSUtils.checkVersion(FSUtils.java:710)

at org.apache.hadoop.hbase.master.MasterFileSystem.checkRootDir(MasterFileSystem.java:500)

at org.apache.hadoop.hbase.master.MasterFileSystem.createInitialFileSystemLayout(MasterFileSystem.java:169)

at org.apache.hadoop.hbase.master.MasterFileSystem.<init>(MasterFileSystem.java:144)

at org.apache.hadoop.hbase.master.HMaster.finishActiveMasterInitialization(HMaster.java:721)

at org.apache.hadoop.hbase.master.HMaster.access$500(HMaster.java:197)

at org.apache.hadoop.hbase.master.HMaster$1.run(HMaster.java:1867)

at java.lang.Thread.run(Thread.java:748)
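
The "version null" in the exception suggests that the Master could not read a version file under hbase.rootdir. Below is a minimal sketch for checking whether that file is present. It assumes the root directory is /hbase (as the later logs indicate) and that the file is named hbase.version. The function name check_version_file is made up for illustration.

```shell
#!/usr/bin/env bash
# Report whether the HBase version file exists under a given root directory.
# Assumption: hbase.rootdir is /hbase; pass another path if yours differs.
check_version_file() {
  local rootdir="${1:-/hbase}"
  # `hdfs dfs -test -e PATH` exits with status 0 when PATH exists.
  if hdfs dfs -test -e "${rootdir}/hbase.version"; then
    echo "present: ${rootdir}/hbase.version"
  else
    echo "missing: ${rootdir}/hbase.version"
  fi
}

# Usage (as a user that can read the directory):
#   check_version_file /hbase
```

A "missing" result would be consistent with the exception, though an unreadable or empty file could probably produce the same symptom.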

The message suggests running the hbase hbck -fixVersionFile command, but running it directly as root fails with the following error:

[root@hadoop-master1 ~]# hbase hbck -fixVersionFile

Java HotSpot(TM) 64-Bit Server VM warning: Using incremental CMS is deprecated and will likely be removed in a future release

28/11/03 22:53:32 INFO Configuration.deprecation: fs.default.name is deprecated. Instead, use fs.defaultFS

28/11/03 22:53:32 INFO zookeeper.RecoverableZooKeeper: Process identifier=hbase Fsck connecting to ZooKeeper ensemble=hadoop-master2:2181,hadoop-master3:2181,hadoop-master1:2181

28/11/03 22:53:32 INFO zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.5-cdh5.16.1--1, built on 11/22/2018 05:28 GMT

28/11/03 22:53:32 INFO zookeeper.ZooKeeper: Client environment:host.name=hadoop-master1

28/11/03 22:53:32 INFO zookeeper.ZooKeeper: Client environment:java.version=1.8.0_211

28/11/03 22:53:32 INFO zookeeper.ZooKeeper: Client environment:java.vendor=Oracle Corporation

28/11/03 22:53:32 INFO zookeeper.ZooKeeper: Client environment:java.home=/usr/java/jdk1.8.0_211-amd64/jre

……

28/11/03 22:42:00 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=hadoop-master2:2181,hadoop-master3:2181,hadoop-master1:2181 sessionTimeout=60000 watcher=hbase Fsck0x0, quorum=hadoop-master2:2181,hadoop-master3:2181,hadoop-master1:2181, baseZNode=/hbase

HBaseFsck command line options: -fixAssignments

28/11/03 22:42:00 INFO zookeeper.ClientCnxn: Opening socket connection to server hadoop-master1/192.168.20.181:2181. Will not attempt to authenticate using SASL (unknown error)

28/11/03 22:42:00 INFO zookeeper.ClientCnxn: Socket connection established, initiating session, client: /192.168.20.181:33982, server: hadoop-master1/192.168.20.181:2181

28/11/03 22:42:00 INFO zookeeper.ClientCnxn: Session establishment complete on server hadoop-master1/192.168.20.181:2181, sessionid = 0xffb0545652500457, negotiated timeout = 60000

28/11/03 22:42:00 WARN util.HBaseFsck: Got AccessDeniedException when preCheckPermission

org.apache.hadoop.hbase.security.AccessDeniedException: Permission denied: action=WRITE path=hdfs://nameservice1/hbase/.tmp user=root

at org.apache.hadoop.hbase.util.FSUtils.checkAccess(FSUtils.java:2040)

at org.apache.hadoop.hbase.util.HBaseFsck.preCheckPermission(HBaseFsck.java:1990)

at org.apache.hadoop.hbase.util.HBaseFsck.exec(HBaseFsck.java:4952)

at org.apache.hadoop.hbase.util.HBaseFsck$HBaseFsckTool.run(HBaseFsck.java:4780)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:84)

at org.apache.hadoop.hbase.util.HBaseFsck.main(HBaseFsck.java:4768)

Current user root does not have write perms to hdfs://nameservice1/hbase/.tmp. Please rerun hbck as hdfs user hbase

[root@hadoop-master1 ~]#
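
To see why root is rejected, it can help to look at the owner and mode of the path named in the message. The sketch below extracts those fields from one line of `hdfs dfs -ls` output. The helper name ls_owner_mode is hypothetical, and the field positions assume the listing format shown elsewhere in this post.

```shell
#!/usr/bin/env bash
# Print "mode owner group" for each line of `hdfs dfs -ls` output read from stdin.
# Assumed column layout: mode, replication, owner, group, size, date, time, path.
ls_owner_mode() {
  awk '{ print $1, $3, $4 }'
}

# Usage (-d lists the directory itself rather than its contents):
#   hdfs dfs -ls -d /hbase/.tmp | ls_owner_mode
```

If the owner is not the current user and the mode grants no write bit to "other", a WRITE check for that user will fail, which matches the AccessDeniedException above.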

The message indicates insufficient permissions, so use sudo to run the command again as the hbase user:

[root@hadoop-master1 ~]# sudo -u hbase hbase hbck -fixVersionFile

Java HotSpot(TM) 64-Bit Server VM warning: Using incremental CMS is deprecated and will likely be removed in a future release

28/11/03 22:54:32 INFO Configuration.deprecation: fs.default.name is deprecated. Instead, use fs.defaultFS

28/11/03 22:54:32 INFO zookeeper.RecoverableZooKeeper: Process identifier=hbase Fsck connecting to ZooKeeper ensemble=hadoop-master2:2181,hadoop-master3:2181,hadoop-master1:2181

28/11/03 22:54:32 INFO zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.5-cdh5.16.1--1, built on 11/22/2018 05:28 GMT

28/11/03 22:54:32 INFO zookeeper.ZooKeeper: Client environment:host.name=hadoop-master1

28/11/03 22:54:32 INFO zookeeper.ZooKeeper: Client environment:java.version=1.8.0_211

28/11/03 22:54:32 INFO zookeeper.ZooKeeper: Client environment:java.vendor=Oracle Corporation

28/11/03 22:54:32 INFO zookeeper.ZooKeeper: Client environment:java.home=/usr/java/jdk1.8.0_211-amd64/jre

…..

28/11/03 23:01:03 INFO client.RpcRetryingCaller: Call exception, tries=27, retries=35, started=390159 ms ago, cancelled=false, msg=

28/11/03 23:01:23 INFO client.RpcRetryingCaller: Call exception, tries=28, retries=35, started=410169 ms ago, cancelled=false, msg=

28/11/03 23:01:43 INFO client.RpcRetryingCaller: Call exception, tries=29, retries=35, started=430296 ms ago, cancelled=false, msg=

28/11/03 23:02:03 INFO client.RpcRetryingCaller: Call exception, tries=30, retries=35, started=450445 ms ago, cancelled=false, msg=

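The command still fails, this time with repeated call exceptions. Because the HDFS storage disks had been replaced earlier, I suspected that was the cause and decided to reinstall HBase.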

First, I deleted the /hbase directory:

[dave@www.cndba.cn ~]# sudo -u hdfs hdfs dfs -rm -r /hbase

28/11/03 23:20:12 INFO fs.TrashPolicyDefault: Moved: 'hdfs://nameservice1/hbase' to trash at: hdfs://nameservice1/user/hdfs/.Trash/Current/hbase

[dave@www.cndba.cn ~]# hdfs dfs -ls /

Found 2 items

drwxrwxrwt - hdfs supergroup 0 2019-04-30 13:45 /tmp

drwxr-xr-x - hdfs supergroup 0 2028-11-03 23:20 /user

[dave@www.cndba.cn ~]#
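
Note that the log line says the directory was moved to trash, not removed outright, so it should stay recoverable until HDFS empties the trash. As a hedged sketch, the helper below (hypothetical name trash_path_from_log) pulls the trash location out of such a log line so it can be moved back if the deletion turns out to be a mistake.

```shell
#!/usr/bin/env bash
# Extract the trash location from a TrashPolicyDefault "Moved: ... to trash at: ..." line.
# Prints nothing if the line does not match that pattern.
trash_path_from_log() {
  sed -n 's/.* to trash at: \(.*\)$/\1/p'
}

# Usage (assumes the original location was /hbase and it no longer exists):
#   trash="$(sudo -u hdfs hdfs dfs -rm -r /hbase 2>&1 | trash_path_from_log)"
#   sudo -u hdfs hdfs dfs -mv "$trash" /hbase
```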

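Then I configured HBase again in CDH, but the HMaster still would not start. The log reported that the /hbase directory did not exist, so I created it manually: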

[dave@www.cndba.cn ~]# su - hdfs

[hdfs@hadoop-master2 ~]$ hdfs dfs -mkdir /hbase

[hdfs@hadoop-master2 ~]$ hdfs dfs -ls /

Found 3 items

drwxr-xr-x - hdfs supergroup 0 2028-11-03 23:23 /hbase

drwxrwxrwt - hdfs supergroup 0 2019-04-30 13:45 /tmp

drwxr-xr-x - hdfs supergroup 0 2028-11-03 23:20 /user

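This time the log reported insufficient permissions, which is the same problem as before. After granting permissions manually with the hdfs command, the Master started successfully: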

Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.AccessControlException): Permission denied: user=hbase, access=WRITE, inode="/hbase":hdfs:supergroup:drwxr-xr-x

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkFsPermission(DefaultAuthorizationProvider.java:279)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:260)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:240)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkPermission(DefaultAuthorizationProvider.java:165)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:152)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:3885)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkPermission(FSNamesystem.java:6861)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.deleteInternal(FSNamesystem.java:4290)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.deleteInt(FSNamesystem.java:4245)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.delete(FSNamesystem.java:4229)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.delete(NameNodeRpcServer.java:856)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.delete(AuthorizationProviderProxyClientProtocol.java:313)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.delete(ClientNamenodeProtocolServerSideTranslatorPB.java:626)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2278)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2274)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1924)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2272)

[hdfs@hadoop-master2 ~]$ hdfs dfs -chmod 777 /hbase

[hdfs@hadoop-master2 ~]$ hdfs dfs -ls /hbase

[hdfs@hadoop-master2 ~]$ hdfs dfs -ls /

Found 3 items

drwxrwxrwx - hdfs supergroup 0 2028-11-03 23:23 /hbase

drwxrwxrwt - hdfs supergroup 0 2019-04-30 13:45 /tmp

drwxr-xr-x - hdfs supergroup 0 2028-11-03 23:20 /user

[hdfs@hadoop-master2 ~]$
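
The chmod 777 above gets the Master running, but it leaves /hbase writable by every user. A narrower alternative, sketched here and not taken from the original troubleshooting session, is to hand the directory to the HBase service user. This assumes the service user and group are both named hbase and that the caller may run commands as the HDFS superuser hdfs. The function name fix_hbase_root_owner is hypothetical.

```shell
#!/usr/bin/env bash
# Make the HBase service user the owner of the HBase root directory
# instead of opening it to everyone.
# Assumptions: service user/group are "hbase", HDFS superuser is "hdfs".
fix_hbase_root_owner() {
  local rootdir="${1:-/hbase}"
  # Recursively change owner and group so the Master can write below the root.
  sudo -u hdfs hdfs dfs -chown -R hbase:hbase "$rootdir"
  # Restore a conventional directory mode on the root itself.
  sudo -u hdfs hdfs dfs -chmod 755 "$rootdir"
}

# Usage:
#   fix_hbase_root_owner /hbase
```

With ownership set this way, the WRITE check for user=hbase on the /hbase inode should pass without granting write access to other users.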

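In hindsight this was probably a detour: granting the permissions at the outset should have been enough to solve the problem. I am noting it here for future reference.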
