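An earlier post explained that the storage disks on Hadoop data nodes are used as individual disks, without RAID:

Hadoop cluster roles and node count planning recommendations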

https://www.cndba.cn/dave/article/3372

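This post walks through adding a disk to an existing Hadoop environment. Assume the new disk is /dev/sde, a raw disk with no RAID. Some server RAID controllers are unstable when RAID and non-RAID disks are mixed on the same card; in that case, consider configuring each data disk as a single-disk RAID 0 instead.

First, partition the disk: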

[dave@www.cndba.cn ~]# fdisk /dev/sde

Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel

Building a new DOS disklabel with disk identifier 0xff167c3a.

Changes will remain in memory only, until you decide to write them.

After that, of course, the previous content won't be recoverable.

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

WARNING: DOS-compatible mode is deprecated. It's strongly recommended to

switch off the mode (command 'c') and change display units to

sectors (command 'u').

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First cylinder (1-3916, default 1):

Using default value 1

Last cylinder, +cylinders or +size{K,M,G} (1-3916, default 3916):

Using default value 3916

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.
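The interactive dialog above can also be scripted, which helps when several nodes receive the same disk. The following is a minimal sketch, not the procedure used in this post: it assumes `parted` is installed, that the target disk is empty, and it introduces a hypothetical `DRY_RUN` switch and helper names for illustration. It keeps the DOS (msdos) label that fdisk created here; disks larger than 2 TiB need `gpt` instead.

```shell
#!/bin/sh
# Sketch: create one primary partition spanning the whole disk.
# DRY_RUN=1 (the default) only prints the commands; set DRY_RUN=0 and run as root to apply.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

partition_whole_disk() {
    disk="$1"   # for example /dev/sde; everything on it will be lost
    run parted -s "$disk" mklabel msdos
    run parted -s "$disk" mkpart primary ext4 0% 100%
}

partition_whole_disk /dev/sde
```

With the default dry-run setting the script only echoes the two `parted` commands, so it can be reviewed before anything touches the disk.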

Format the new partition; ext4 is used here:

[dave@www.cndba.cn ~]# mkfs.ext4 /dev/sde1

mke2fs 1.41.12 (17-May-2010)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=0 blocks, Stripe width=0 blocks

1966080 inodes, 7863809 blocks

393190 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=4294967296

240 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,

4096000

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

This filesystem will be automatically checked every 20 mounts or

180 days, whichever comes first. Use tune2fs -c or -i to override.
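The mke2fs output reports that 5% of the blocks are reserved for the super user. On a disk that only holds HDFS data, that reserve is often considered wasted space. The sketch below works out how much it amounts to from the numbers printed above; lowering it with `tune2fs -m` is an optional step that the original procedure does not perform, so it is left commented out.

```shell
#!/bin/sh
# Values copied from the mke2fs output above.
reserved_blocks=393190
block_size=4096

# Integer arithmetic: bytes -> MiB.
reserved_mib=$(( reserved_blocks * block_size / 1024 / 1024 ))
echo "Reserved for root: ${reserved_mib} MiB"

# Assumption: a 1% reserve is acceptable for a data-only disk.
# Uncomment to apply (as root):
# tune2fs -m 1 /dev/sde1
```

On this roughly 30 GB partition the reserve comes to about 1.5 GiB.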

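Create the HDFS storage mount points (a second new disk, /dev/sdd, appears alongside /dev/sde in the output below):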

[dave@www.cndba.cn ~]# cd /dfs

[dave@www.cndba.cn dfs]# mkdir sde

[dave@www.cndba.cn dfs]# mkdir sdd

[dave@www.cndba.cn dfs]# mount -a

[dave@www.cndba.cn dfs]# ll

total 20

drwx------. 3 hdfs hadoop 4096 Oct 28 18:40 dn

drwx------ 4 hdfs hadoop 4096 Nov 3 12:02 sdb

drwx------ 4 hdfs hadoop 4096 Nov 3 12:02 sdc

drwxr-xr-x 3 root root 4096 Nov 3 12:25 sdd

drwxr-xr-x 3 root root 4096 Nov 3 12:25 sde

Change the owner and group of the mount points:

[dave@www.cndba.cn dfs]# chown hdfs:hadoop sd*

[dave@www.cndba.cn dfs]# ll

total 20

drwx------. 3 hdfs hadoop 4096 Oct 28 18:40 dn

drwx------ 4 hdfs hadoop 4096 Nov 3 12:02 sdb

drwx------ 4 hdfs hadoop 4096 Nov 3 12:02 sdc

drwxr-xr-x 3 hdfs hadoop 4096 Nov 3 12:25 sdd

drwxr-xr-x 3 hdfs hadoop 4096 Nov 3 12:25 sde

Configure the disks to mount automatically at boot:

[dave@www.cndba.cn ~]# vim /etc/fstab

#

# /etc/fstab

# Created by anaconda on Tue Oct 24 11:38:30 2028

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/vg_hadoopcm-lv_root / ext4 defaults 1 1

UUID=b13d6e57-bfd9-4959-a811-c36292ef585e /boot ext4 defaults 1 2

/dev/mapper/vg_hadoopcm-lv_swap swap swap defaults 0 0

tmpfs /dev/shm tmpfs defaults 0 0

devpts /dev/pts devpts gid=5,mode=620 0 0

sysfs /sys sysfs defaults 0 0

proc /proc proc defaults 0 0

/dev/sdb1 /dfs/sdb ext4 defaults 0 0

/dev/sdc1 /dfs/sdc ext4 defaults 0 0

/dev/sde1 /dfs/sde ext4 defaults 0 0

/dev/sdd1 /dfs/sdd ext4 defaults 0 0

"/etc/fstab" 19L, 1089C written

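The entries above refer to the partitions by device name. Names such as /dev/sde can change when disks are added or reordered, so referring to the filesystem UUID is a common alternative. The sketch below is an assumption-laden variant, not what this post configured: `fstab_line` is a hypothetical helper, the UUID shown is a placeholder, and `noatime` is an optional mount option that is frequently suggested for HDFS data disks.

```shell
#!/bin/sh
# Sketch: print an /etc/fstab entry keyed by UUID instead of device name.
fstab_line() {
    # $1 = filesystem UUID, $2 = mount point
    printf 'UUID=%s %s ext4 defaults,noatime 0 0\n' "$1" "$2"
}

# On the real host the UUID would come from (as root):
#   uuid=$(blkid -s UUID -o value /dev/sde1)
uuid="00000000-0000-0000-0000-000000000000"   # placeholder, not a real UUID

fstab_line "$uuid" /dfs/sde
```

The printed line can be reviewed and then appended to /etc/fstab in place of the `/dev/sde1` entry.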
Mount the disks and verify:

[dave@www.cndba.cn ~]# mount -a

[hdfs@hadoop-work2 ~]$ df -lh

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/vg_hadoopcm-lv_root

26G 8.9G 16G 36% /

tmpfs 6.2G 72K 6.2G 1% /dev/shm

/dev/sda1 477M 41M 411M 10% /boot

/dev/sdb1 9.8G 23M 9.2G 1% /dfs/sdb

/dev/sdc1 9.8G 23M 9.2G 1% /dfs/sdc

/dev/sde1 30G 44M 28G 1% /dfs/sde

/dev/sdd1 30G 44M 28G 1% /dfs/sdd

cm_processes 6.2G 2.0M 6.2G 1% /opt/cm-5.16.1/run/cloudera-scm-agent/process

[hdfs@hadoop-work2 ~]$
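Reading the df output by eye works for one node. For a scripted check, the sketch below tests whether each data directory really is a mount point, since an unmounted directory would let data land on the root filesystem instead. It assumes the `mountpoint` utility from util-linux is available; `check_mounts` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: report which of the given directories are mount points.
# Returns non-zero if any of them is not mounted.
check_mounts() {
    missing=0
    for dir in "$@"; do
        if mountpoint -q "$dir"; then
            echo "OK       $dir"
        else
            echo "MISSING  $dir"
            missing=1
        fi
    done
    return "$missing"
}

check_mounts /dfs/sdd /dfs/sde || echo "at least one data directory is not a mount point" >&2
```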

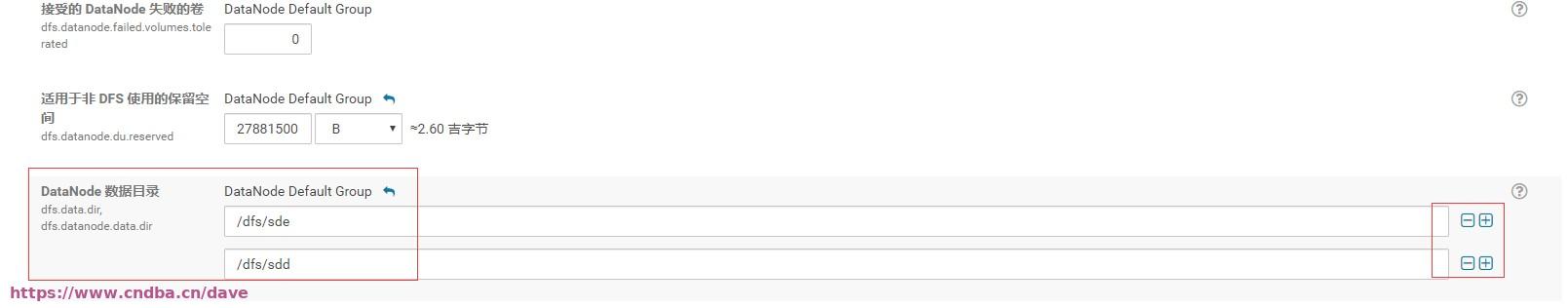

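Once the disks are mounted, update the storage paths in the CDH configuration UI and restart HDFS.

Copyright notice: this is an original article by the author and may not be reproduced without the author's permission.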
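The storage paths correspond to the HDFS property `dfs.datanode.data.dir`, which takes a comma-separated list of directories. The sketch below builds such a list from the mount points used in this post. The `dn` subdirectory name and the `data_dirs` helper are assumptions for illustration; use whatever directory layout the existing disks already follow.

```shell
#!/bin/sh
# Sketch: join "<mount point>/dn" entries with commas.
# The "dn" subdirectory is a hypothetical layout, not taken from this cluster.
data_dirs() {
    out=""
    for m in "$@"; do
        out="${out:+$out,}$m/dn"
    done
    echo "$out"
}

data_dirs /dfs/sdb /dfs/sdc /dfs/sdd /dfs/sde

# After the restart, the value seen by the local configuration can be read with:
#   hdfs getconf -confKey dfs.datanode.data.dir
# On a Cloudera Manager cluster this reads the client configuration on that host,
# which may differ from the DataNode role's own settings.
```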