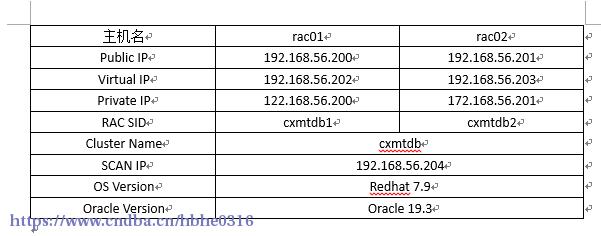

1 Configure the environment

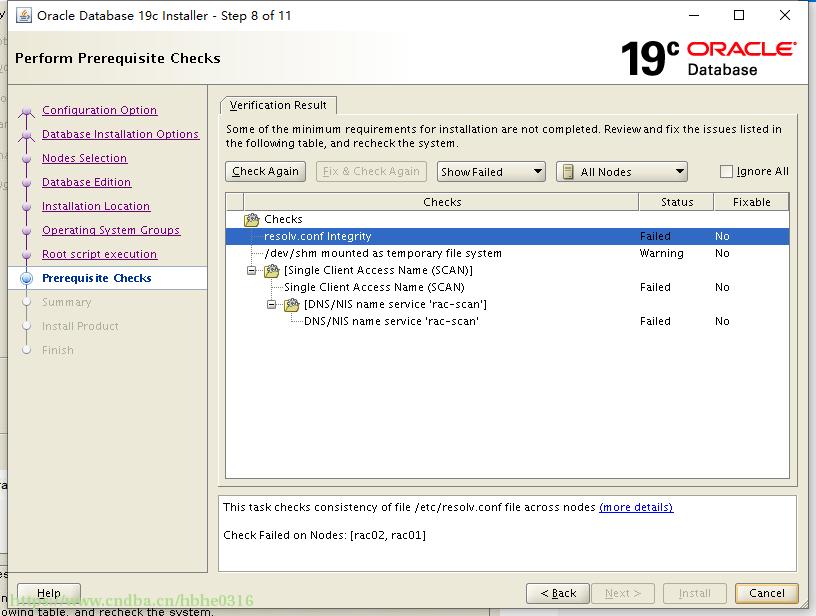

1.1 Set up /etc/hosts on both servers

[root@rac01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.56.200 rac01

172.168.56.200 rac01-priv

192.168.56.202 rac01-vip

192.168.56.201 rac02

172.168.56.201 rac02-priv

192.168.56.203 rac02-vip

192.168.56.204 rac-scan
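The entries above can be sanity-checked on each node before moving on. The following is a minimal sketch, assuming the seven names used in this lab; `check_hosts` is a hypothetical helper, not part of any Oracle tooling. It only confirms that each name appears as a hostname field on a non-comment line of a hosts-format file.

```shell
# Hypothetical helper: report names missing from a hosts-format file.
# Usage: check_hosts FILE NAME...
check_hosts() {
    local file=$1; shift
    local name missing=0
    for name in "$@"; do
        # Look for the name as a whole field after the IP column,
        # skipping lines that are commented out.
        if ! awk -v n="$name" '
            /^[[:space:]]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit found ? 0 : 1 }' "$file"; then
            echo "missing: $name"
            missing=1
        fi
    done
    return "$missing"
}

# Example (run on each node):
# check_hosts /etc/hosts rac01 rac01-priv rac01-vip rac02 rac02-priv rac02-vip rac-scan
```

The function returns non-zero if any name is absent, so it can gate later steps in a setup script.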

1.2 Disable the firewall

[root@rac01 ~]# systemctl stop firewalld.service

[root@rac01 ~]# systemctl disable firewalld.service

1.3 Disable SELinux

[root@rac01 ~]# sed -i "s/SELINUX=enforcing/SELINUX=disabled/" /etc/selinux/config

[root@rac01 ~]# setenforce 0

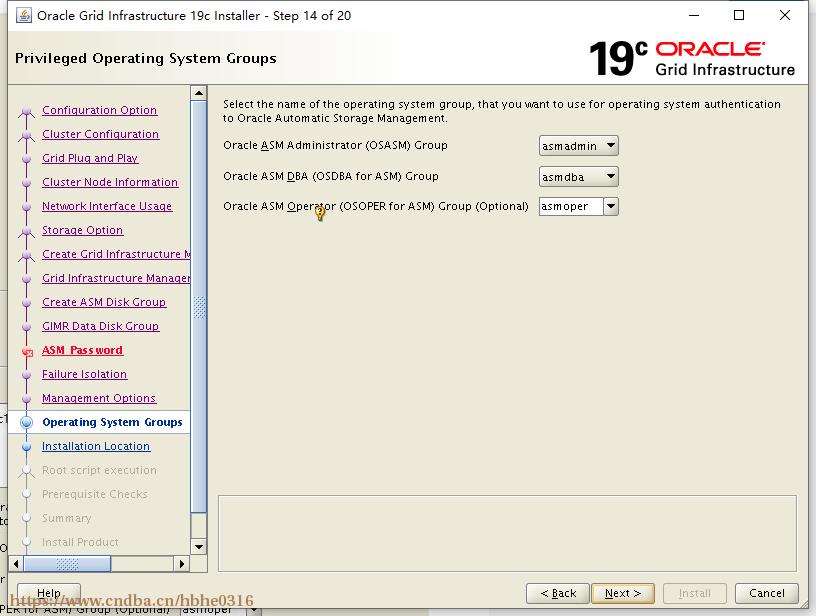

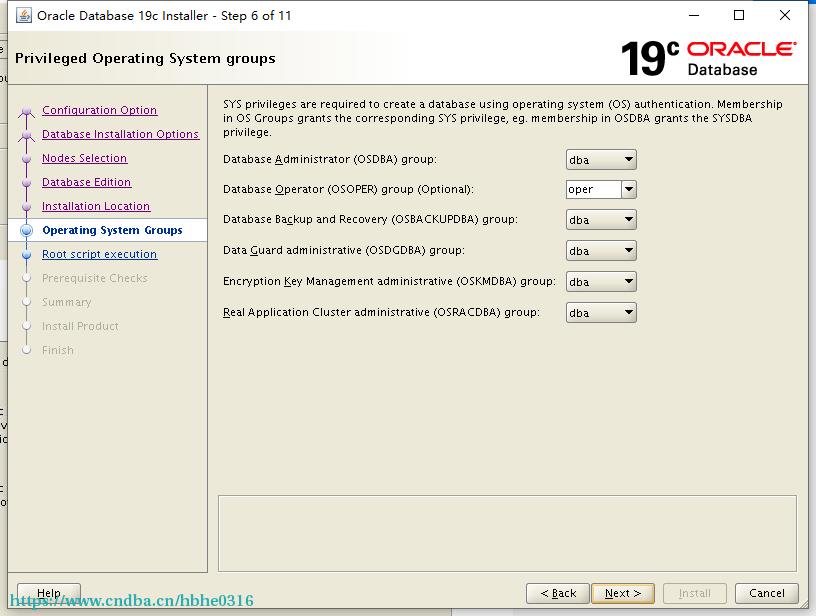

1.4 Add users and groups

[root@rac01 ~]# /usr/sbin/groupadd -g 54321 oinstall

[root@rac01 ~]# /usr/sbin/groupadd -g 54322 dba

[root@rac01 ~]# /usr/sbin/groupadd -g 54323 oper

[root@rac01 ~]# /usr/sbin/groupadd -g 54324 backupdba

[root@rac01 ~]# /usr/sbin/groupadd -g 54325 dgdba

[root@rac01 ~]# /usr/sbin/groupadd -g 54326 kmdba

[root@rac01 ~]# /usr/sbin/groupadd -g 54327 asmdba

[root@rac01 ~]# /usr/sbin/groupadd -g 54328 asmoper

[root@rac01 ~]# /usr/sbin/groupadd -g 54329 asmadmin

[root@rac01 ~]# /usr/sbin/groupadd -g 54330 racdba

[root@rac01 ~]# /usr/sbin/useradd -u 54321 -g oinstall -G dba,asmdba,oper oracle

[root@rac01 ~]# /usr/sbin/useradd -u 54322 -g oinstall -G dba,oper,backupdba,dgdba,kmdba,asmdba,asmoper,asmadmin,racdba grid

[root@rac01 ~]# echo "wwwwww" | passwd --stdin oracle

[root@rac01 ~]# echo "wwwwww" | passwd --stdin grid

1.5 Disable transparent huge pages

[root@rac01 ~]# echo 'GRUB_CMDLINE_LINUX="transparent_hugepage=never"' >> /etc/default/grub

[root@rac01 ~]# grub2-mkconfig -o /boot/grub2/grub.cfg

Generating grub configuration file ...

Found linux image: /boot/vmlinuz-3.10.0-1160.el7.x86_64

Found initrd image: /boot/initramfs-3.10.0-1160.el7.x86_64.img

Found linux image: /boot/vmlinuz-0-rescue-63924c89eb3e004a8ca3414e737ac5cd

Found initrd image: /boot/initramfs-0-rescue-63924c89eb3e004a8ca3414e737ac5cd.img

done

[root@rac01 ~]# grub2-mkconfig -o /boot/efi/EFI/redhat/grub.cfg

Generating grub configuration file ...

Found linux image: /boot/vmlinuz-3.10.0-1160.el7.x86_64

Found initrd image: /boot/initramfs-3.10.0-1160.el7.x86_64.img

Found linux image: /boot/vmlinuz-0-rescue-63924c89eb3e004a8ca3414e737ac5cd

Found initrd image: /boot/initramfs-0-rescue-63924c89eb3e004a8ca3414e737ac5cd.img

done

[root@rac01 ~]# reboot

[root@rac01 ~]# cat /proc/cmdline

BOOT_IMAGE=/vmlinuz-3.10.0-1160.el7.x86_64 root=/dev/mapper/rhel-root ro transparent_hugepage=never

[root@rac01 ~]# grep Huge /proc/meminfo

AnonHugePages: 0 kB

HugePages_Total: 0

HugePages_Free: 0

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 2048 kB
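Because grub2-mkconfig reads /etc/default/grub as shell variable assignments, a second GRUB_CMDLINE_LINUX= line appended with >> replaces the value set by any earlier line; the /proc/cmdline output above accordingly carries only transparent_hugepage=never. Where the existing kernel arguments should be kept, one alternative is to append to the existing line instead. This is a sketch only: `add_kernel_arg` is a hypothetical helper, and it assumes a single double-quoted GRUB_CMDLINE_LINUX line and an argument that contains no `/` characters.

```shell
# Hypothetical helper: append one kernel argument inside the existing
# GRUB_CMDLINE_LINUX="..." line instead of adding a second assignment.
# Usage: add_kernel_arg FILE ARG
add_kernel_arg() {
    local file=$1 arg=$2
    # Leave the file alone if the argument is already on that line.
    if grep -q "^GRUB_CMDLINE_LINUX=.*${arg}" "$file"; then
        return 0
    fi
    # Capture the current quoted value and re-emit it with the argument appended.
    sed -i "s/^GRUB_CMDLINE_LINUX=\"\(.*\)\"\$/GRUB_CMDLINE_LINUX=\"\1 ${arg}\"/" "$file"
}

# Example (as root, followed by grub2-mkconfig and a reboot as shown above):
# add_kernel_arg /etc/default/grub transparent_hugepage=never
```

Running it twice leaves the file unchanged the second time, which makes it safer to use in a repeatable setup script.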

1.6 Configure standard huge pages

https://www.cndba.cn/hbhe0316/article/4719
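The steps themselves are in the linked article. As a worked-arithmetic sketch that is not taken from that article: the number of huge pages needed to back an SGA is the SGA size divided by the huge page size, rounded up. `hugepages_for_sga` is a hypothetical helper, and the SGA size is an assumed input.

```shell
# Hypothetical helper: number of huge pages needed to hold an SGA.
# $1 = SGA size in MB (assumed input)
# $2 = huge page size in kB (default 2048, the Hugepagesize that
#      /proc/meminfo reports in section 1.5)
hugepages_for_sga() {
    local sga_mb=$1 page_kb=${2:-2048}
    local sga_kb=$((sga_mb * 1024))
    # Integer ceiling division.
    echo $(( (sga_kb + page_kb - 1) / page_kb ))
}

# Example: hugepages_for_sga 3072    # a 3 GB SGA
```

For a 3072 MB SGA this gives 1536 pages, or 3145728 kB, which is the same figure used for the oracle memlock limit in section 1.12. The resulting count is the kind of value that would go into vm.nr_hugepages; any extra headroom is a judgment call.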

1.7 Disable chronyd

[root@rac01 oracle]# systemctl stop chronyd

[root@rac01 oracle]# systemctl disable chronyd

[root@rac01 oracle]# mv /etc/chrony.conf /etc/chrony.bak

1.8 Disable avahi-daemon

[root@rac01 ~]# systemctl stop avahi-daemon

[root@rac01 ~]# systemctl disable avahi-daemon

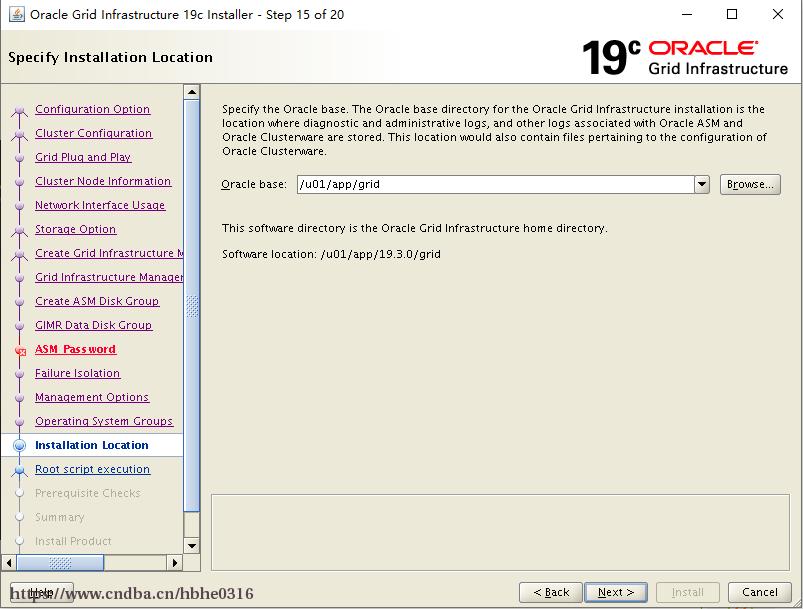

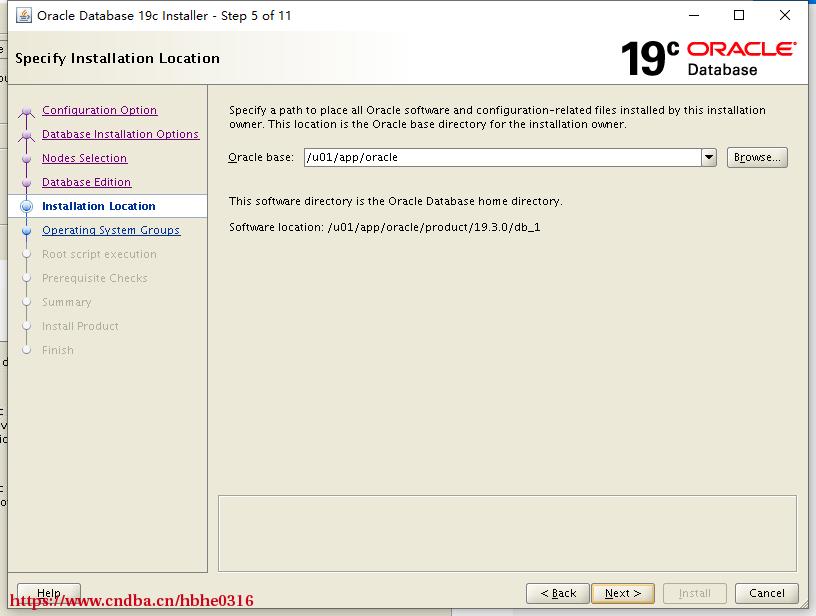

1.9 Create directories

[root@rac01 oracle]# mkdir -p /u01/app/19.3.0/grid

[root@rac01 oracle]# mkdir -p /u01/app/grid

[root@rac01 oracle]# mkdir -p /u01/app/oracle/product/19.3.0/db_1

[root@rac01 oracle]# chown -R grid:oinstall /u01

[root@rac01 oracle]# chown -R oracle:oinstall /u01/app/oracle

[root@rac01 oracle]# chmod -R 775 /u01/

1.10 Configure environment variables for the oracle user

[root@rac01 oracle]# vi /home/oracle/.bash_profile

ORACLE_SID=cxmtdb1;export ORACLE_SID

ORACLE_UNQNAME=cxmtdb;export ORACLE_UNQNAME

JAVA_HOME=/usr/local/java; export JAVA_HOME

ORACLE_BASE=/u01/app/oracle; export ORACLE_BASE

ORACLE_HOME=$ORACLE_BASE/product/19.3.0/db_1; export ORACLE_HOME

ORACLE_TERM=xterm; export ORACLE_TERM

NLS_DATE_FORMAT="YYYY:MM:DD HH24:MI:SS"; export NLS_DATE_FORMAT

NLS_LANG=american_america.ZHS16GBK; export NLS_LANG

TNS_ADMIN=$ORACLE_HOME/network/admin; export TNS_ADMIN

ORA_NLS11=$ORACLE_HOME/nls/data; export ORA_NLS11

PATH=.:${JAVA_HOME}/bin:${PATH}:$HOME/bin:$ORACLE_HOME/bin:$ORA_CRS_HOME/bin

PATH=${PATH}:/usr/bin:/bin:/usr/bin/X11:/usr/local/bin

export PATH

LD_LIBRARY_PATH=$ORACLE_HOME/lib

LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:$ORACLE_HOME/oracm/lib

LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:/lib:/usr/lib:/usr/local/lib

export LD_LIBRARY_PATH

CLASSPATH=$ORACLE_HOME/JRE

CLASSPATH=${CLASSPATH}:$ORACLE_HOME/jlib

CLASSPATH=${CLASSPATH}:$ORACLE_HOME/rdbms/jlib

CLASSPATH=${CLASSPATH}:$ORACLE_HOME/network/jlib

export CLASSPATH

THREADS_FLAG=native; export THREADS_FLAG

export TEMP=/tmp

export TMPDIR=/tmp

umask 022

1.11 Configure environment variables for the grid user

[root@rac01 oracle]# vi /home/grid/.bash_profile

PATH=$PATH:$HOME/bin

export ORACLE_SID=+ASM1

export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/19.3.0/grid

export PATH=$ORACLE_HOME/bin:$PATH:/usr/local/bin/:.

export TEMP=/tmp

export TMP=/tmp

export TMPDIR=/tmp

umask 022

export PATH

1.12 Adjust resource limits

[root@rac01 oracle]# cat >> /etc/security/limits.conf <<EOF

grid soft nproc 2047

grid hard nproc 16384

grid soft nofile 1024

grid hard nofile 65536

grid soft stack 10240

grid hard stack 32768

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536

oracle soft stack 10240

oracle hard stack 32768

oracle soft memlock 3145728

oracle hard memlock 3145728

EOF

1.13 Configure PAM

[root@rac01 oracle]# cat >> /etc/pam.d/login <<EOF

session required pam_limits.so

EOF

1.14 Configure NOZEROCONF

[root@rac01 oracle]# echo "NOZEROCONF=yes" >>/etc/sysconfig/network

1.15 Modify kernel parameters

[root@rac01 oracle]# cat >> /etc/sysctl.d/sysctl.conf <<EOF

fs.file-max = 6815744

kernel.sem = 250 32000 100 128

kernel.shmmni = 4096

kernel.shmall = 1073741824

kernel.shmmax = 4398046511104

kernel.panic_on_oops = 1

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

net.ipv4.conf.all.rp_filter = 2

net.ipv4.conf.default.rp_filter = 2

fs.aio-max-nr = 1048576

net.ipv4.ip_local_port_range = 9000 65500

EOF

[root@rac01 oracle]# sysctl --system
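Once the settings are loaded, the live values can be compared against the file. A minimal sketch follows; `sysctl_mismatches` and the `SYSCTL_READ` override are hypothetical names introduced here, and the function assumes simple `key = value` lines such as the ones written to /etc/sysctl.d/sysctl.conf above.

```shell
# Hypothetical helper: print each setting in a sysctl file whose live
# value differs from the file. The reader defaults to `sysctl -n` and can
# be overridden through SYSCTL_READ for dry runs.
# Usage: sysctl_mismatches FILE
sysctl_mismatches() {
    local file=$1 key want have
    while IFS='=' read -r key want; do
        # Unquoted echo trims the ends and collapses blanks and tabs, so
        # multi-value settings such as kernel.sem compare cleanly.
        key=$(echo $key)
        want=$(echo $want)
        case $key in ''|'#'*) continue ;; esac
        have=$(echo $(${SYSCTL_READ:-sysctl -n} "$key" 2>/dev/null))
        [ "$have" = "$want" ] || echo "$key: want '$want', have '$have'"
    done < "$file"
}

# Example (as root):
# sysctl_mismatches /etc/sysctl.d/sysctl.conf
```

No output means every listed parameter is live with the expected value.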

1.16 Install RPM packages

[root@rac01 oracle]# cat /etc/yum.repos.d/rhel79.repo

[rhel7]

name=base

baseurl=ftp://192.168.56.199/pub/rhel79

enabled=1

gpgcheck=0

[root@rac01 oracle]# yum install binutils compat-libstdc++-33 gcc gcc-c++ glibc glibc.i686 glibc-devel ksh libgcc.i686 libstdc++-devel libaio libaio.i686 libaio-devel libaio-devel.i686 libXext libXext.i686 libXtst libXtst.i686 libX11 libX11.i686 libXau libXau.i686 libxcb libxcb.i686 libXi libXi.i686 make sysstat unixODBC unixODBC-devel zlib-devel zlib-devel.i686 compat-libcap1 -y

[root@rac01 oracle]# rpm -ivh /home/compat-libstdc++-33-3.2.3-72.el7.x86_64.rpm

1.17 Configure SSH user equivalence for the grid user

1) On the primary node rac01, generate the public and private keys as the grid user

[root@rac01 /]# su - grid

[grid@rac01 ~]$ mkdir ~/.ssh

[grid@rac01 ~]$ ssh-keygen -t rsa

[grid@rac01 ~]$ ssh-keygen -t dsa

2) Repeat the same steps on the secondary node rac02 so that the two nodes can reach each other without prompts

[root@rac02 /]# su - grid

[grid@rac02 ~]$ mkdir ~/.ssh

[grid@rac02 ~]$ ssh-keygen -t rsa

[grid@rac02 ~]$ ssh-keygen -t dsa

3) On the primary node rac01, run the following as the grid user

[grid@rac01 ~]$ cat ~/.ssh/id_rsa.pub >> ./.ssh/authorized_keys

[grid@rac01 ~]$ cat ~/.ssh/id_dsa.pub >> ./.ssh/authorized_keys

[grid@rac01 ~]$ ssh rac02 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

[grid@rac01 ~]$ ssh rac02 cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

[grid@rac01 ~]$ scp ~/.ssh/authorized_keys rac02:~/.ssh/authorized_keys

4) Run the verification on the primary node rac01

[grid@rac01 ~]$ ssh rac01 date

[grid@rac01 ~]$ ssh rac02 date

5) Run the verification on the secondary node rac02

[grid@rac02 ~]$ ssh rac01 date

[grid@rac02 ~]$ ssh rac02 date
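The four manual checks above can also be scripted. This is a minimal sketch: `check_equivalence` and the `SSH_CMD` override are hypothetical names. It uses ssh in batch mode, which fails instead of asking for a password. A host whose key has not yet been accepted is expected to fail in batch mode as well, so the interactive `ssh ... date` runs above still need to come first.

```shell
# Hypothetical helper: attempt a non-interactive login to every listed
# host and report the ones that fail. SSH_CMD can be overridden for dry runs.
# Usage: check_equivalence HOST...
check_equivalence() {
    local host failed=0
    for host in "$@"; do
        if ${SSH_CMD:-ssh -o BatchMode=yes -o ConnectTimeout=5} "$host" date >/dev/null 2>&1; then
            echo "ok: $host"
        else
            echo "FAILED: $host"
            failed=1
        fi
    done
    return "$failed"
}

# Example (run as grid, and later as oracle, on each node):
# check_equivalence rac01 rac02
```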

1.18 Configure SSH user equivalence for the oracle user

1) On the primary node rac01, generate the public and private keys as the oracle user

[root@rac01 /]# su - oracle

[oracle@rac01 ~]$ mkdir ~/.ssh

[oracle@rac01 ~]$ ssh-keygen -t rsa

[oracle@rac01 ~]$ ssh-keygen -t dsa

2) Repeat the same steps on the secondary node rac02 so that the two nodes can reach each other without prompts

[root@rac02 /]# su - oracle

[oracle@rac02 ~]$ mkdir ~/.ssh

[oracle@rac02 ~]$ ssh-keygen -t rsa

[oracle@rac02 ~]$ ssh-keygen -t dsa

3) On the primary node rac01, run the following as the oracle user

[oracle@rac01 ~]$ cat ~/.ssh/id_rsa.pub >> ./.ssh/authorized_keys

[oracle@rac01 ~]$ cat ~/.ssh/id_dsa.pub >> ./.ssh/authorized_keys

[oracle@rac01 ~]$ ssh rac02 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

[oracle@rac01 ~]$ ssh rac02 cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

[oracle@rac01 ~]$ scp ~/.ssh/authorized_keys rac02:~/.ssh/authorized_keys

4) Run the verification on the primary node rac01

[oracle@rac01 ~]$ ssh rac01 date

[oracle@rac01 ~]$ ssh rac02 date

5) Run the verification on the secondary node rac02

[oracle@rac02 ~]$ ssh rac01 date

[oracle@rac02 ~]$ ssh rac02 date

1.19 Configure shared disks

for i in b c d e f g;

do

echo "KERNEL==\"sd*\",ENV{DEVTYPE}==\"disk\",SUBSYSTEM==\"block\",PROGRAM==\"/usr/lib/udev/scsi_id -g -u -d \$devnode\",RESULT==\"$(/usr/lib/udev/scsi_id -g -u /dev/sd$i)\", RUN+=\"/bin/sh -c 'mknod /dev/asmdisk$i b \$major \$minor; chown grid:asmadmin /dev/asmdisk$i; chmod 0660 /dev/asmdisk$i'\""

done

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="1ATA_VBOX_HARDDISK_VBa5ab3bca-9c189b9f", RUN+="/bin/sh -c 'mknod /dev/asmdiskb b $major $minor; chown grid:asmadmin /dev/asmdiskb; chmod 0660 /dev/asmdiskb'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="1ATA_VBOX_HARDDISK_VB17b12e03-424103ce", RUN+="/bin/sh -c 'mknod /dev/asmdiskc b $major $minor; chown grid:asmadmin /dev/asmdiskc; chmod 0660 /dev/asmdiskc'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="1ATA_VBOX_HARDDISK_VB3f81802d-73cb18e9", RUN+="/bin/sh -c 'mknod /dev/asmdiskd b $major $minor; chown grid:asmadmin /dev/asmdiskd; chmod 0660 /dev/asmdiskd'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="1ATA_VBOX_HARDDISK_VB4012909d-8d5bac83", RUN+="/bin/sh -c 'mknod /dev/asmdiske b $major $minor; chown grid:asmadmin /dev/asmdiske; chmod 0660 /dev/asmdiske'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="1ATA_VBOX_HARDDISK_VB5ce61d79-a21a8b8a", RUN+="/bin/sh -c 'mknod /dev/asmdiskf b $major $minor; chown grid:asmadmin /dev/asmdiskf; chmod 0660 /dev/asmdiskf'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="1ATA_VBOX_HARDDISK_VB74167846-a84de8d0", RUN+="/bin/sh -c 'mknod /dev/asmdiskg b $major $minor; chown grid:asmadmin /dev/asmdiskg; chmod 0660 /dev/asmdiskg'"

[root@rac01 ~]# cat /etc/udev/rules.d/99-oracle-asmdevices.rules

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="1ATA_VBOX_HARDDISK_VBa5ab3bca-9c189b9f", RUN+="/bin/sh -c 'mknod /dev/asmdiskb b $major $minor; chown grid:asmadmin /dev/asmdiskb; chmod 0660 /dev/asmdiskb'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="1ATA_VBOX_HARDDISK_VB17b12e03-424103ce", RUN+="/bin/sh -c 'mknod /dev/asmdiskc b $major $minor; chown grid:asmadmin /dev/asmdiskc; chmod 0660 /dev/asmdiskc'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="1ATA_VBOX_HARDDISK_VB3f81802d-73cb18e9", RUN+="/bin/sh -c 'mknod /dev/asmdiskd b $major $minor; chown grid:asmadmin /dev/asmdiskd; chmod 0660 /dev/asmdiskd'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="1ATA_VBOX_HARDDISK_VB4012909d-8d5bac83", RUN+="/bin/sh -c 'mknod /dev/asmdiske b $major $minor; chown grid:asmadmin /dev/asmdiske; chmod 0660 /dev/asmdiske'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="1ATA_VBOX_HARDDISK_VB5ce61d79-a21a8b8a", RUN+="/bin/sh -c 'mknod /dev/asmdiskf b $major $minor; chown grid:asmadmin /dev/asmdiskf; chmod 0660 /dev/asmdiskf'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="1ATA_VBOX_HARDDISK_VB74167846-a84de8d0", RUN+="/bin/sh -c 'mknod /dev/asmdiskg b $major $minor; chown grid:asmadmin /dev/asmdiskg; chmod 0660 /dev/asmdiskg'"

[root@rac01 ~]# /sbin/udevadm trigger --type=devices --action=change

[root@rac01 ~]# ll /dev/asm*

brw-rw---- 1 grid asmadmin 8, 16 Sep 19 20:27 /dev/asmdiskb

brw-rw---- 1 grid asmadmin 8, 32 Sep 19 20:27 /dev/asmdiskc

brw-rw---- 1 grid asmadmin 8, 48 Sep 19 20:27 /dev/asmdiskd

brw-rw---- 1 grid asmadmin 8, 64 Sep 19 20:27 /dev/asmdiske

brw-rw---- 1 grid asmadmin 8, 80 Sep 19 20:27 /dev/asmdiskf

brw-rw---- 1 grid asmadmin 8, 96 Sep 19 20:27 /dev/asmdiskg

[root@rac01 rules.d]# scp /etc/udev/rules.d/99-oracle-asmdevices.rules rac02:/etc/udev/rules.d/

[root@rac02 ~]# /sbin/udevadm trigger --type=devices --action=change

[root@rac02 ~]# ll /dev/as*

brw-rw---- 1 grid asmadmin 8, 16 Sep 19 20:29 /dev/asmdiskb

brw-rw---- 1 grid asmadmin 8, 32 Sep 19 20:29 /dev/asmdiskc

brw-rw---- 1 grid asmadmin 8, 48 Sep 19 20:29 /dev/asmdiskd

brw-rw---- 1 grid asmadmin 8, 64 Sep 19 20:29 /dev/asmdiske

brw-rw---- 1 grid asmadmin 8, 80 Sep 19 20:29 /dev/asmdiskf

brw-rw---- 1 grid asmadmin 8, 96 Sep 19 20:29 /dev/asmdiskg
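Generating these rules inside a double-quoted echo is fragile, because the shell expands $devnode, $major and $minor unless each is escaped. As a sketch of one way around that, the hypothetical helper `asm_udev_rule` below builds the same rule with printf and a single-quoted format string, so only the disk suffix and the scsi_id result are substituted. The rule layout is copied from the rules file shown above.

```shell
# Hypothetical helper: print one udev rule for an ASM disk.
# $1 = device suffix (b, c, ...)
# $2 = the ID string that scsi_id reports for that disk
asm_udev_rule() {
    local suffix=$1 id=$2
    # The single-quoted format keeps $devnode, $major and $minor literal
    # for udev to expand; printf fills in only the %s placeholders.
    printf 'KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="%s", RUN+="/bin/sh -c '\''mknod /dev/asmdisk%s b $major $minor; chown grid:asmadmin /dev/asmdisk%s; chmod 0660 /dev/asmdisk%s'\''"\n' \
        "$id" "$suffix" "$suffix" "$suffix"
}

# Example (as root; writes the rules file used above):
# for i in b c d e f g; do
#     asm_udev_rule "$i" "$(/usr/lib/udev/scsi_id -g -u /dev/sd$i)"
# done > /etc/udev/rules.d/99-oracle-asmdevices.rules
```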

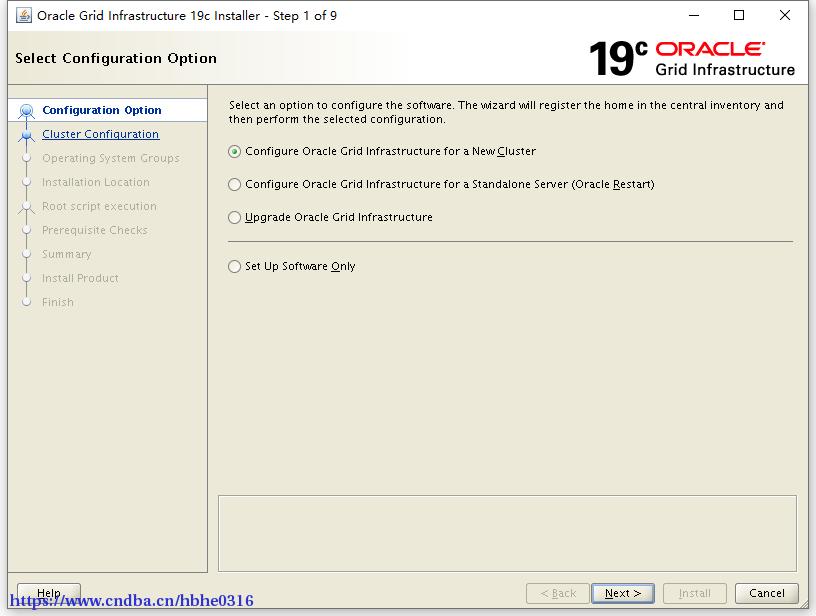

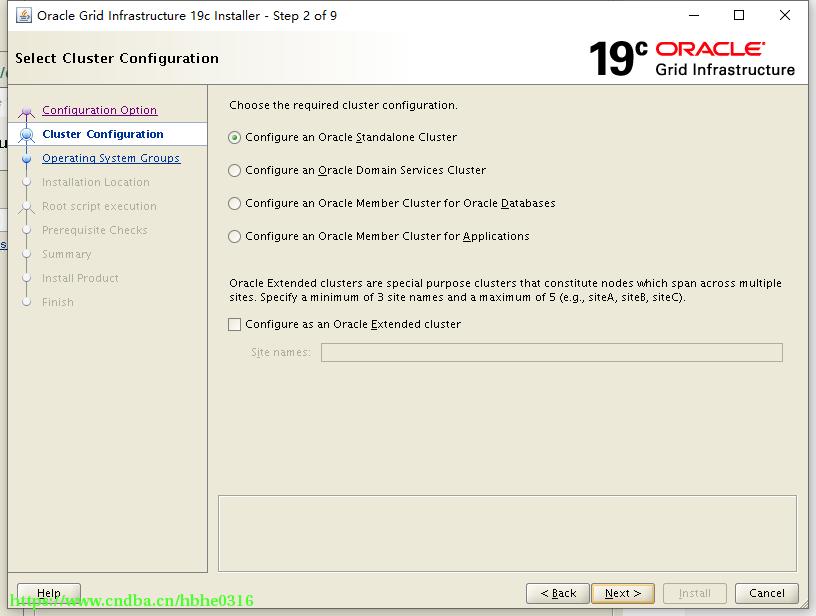

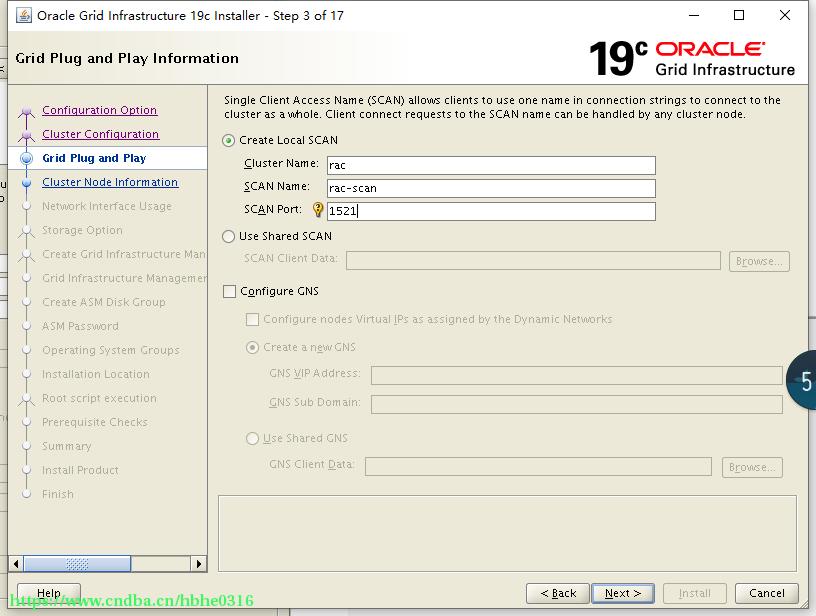

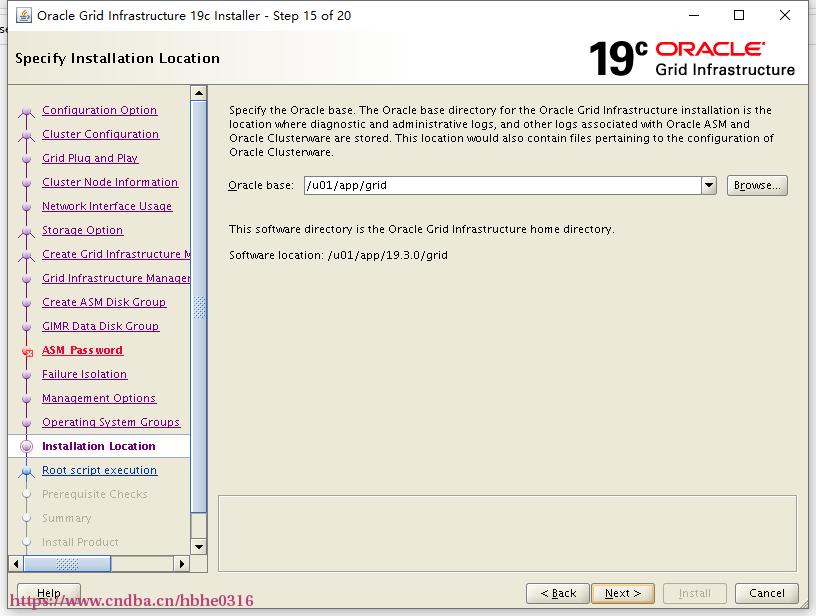

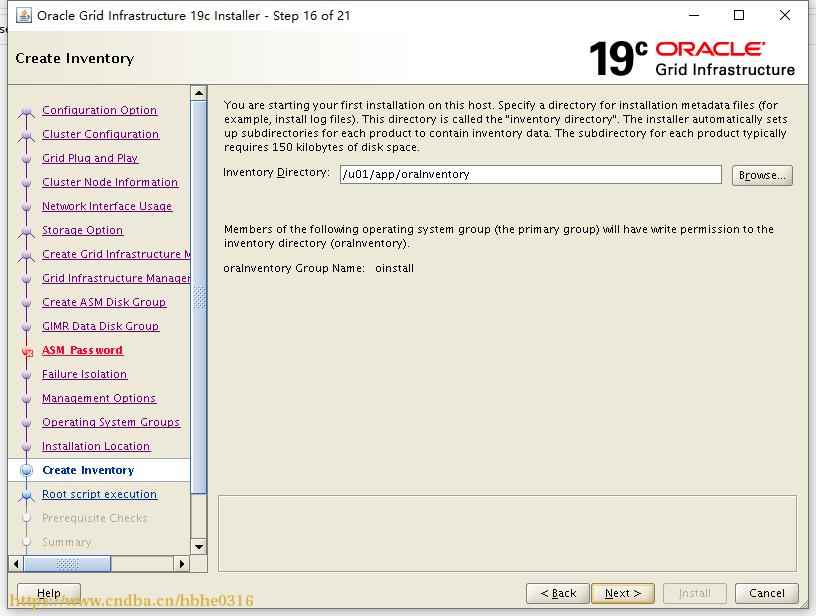

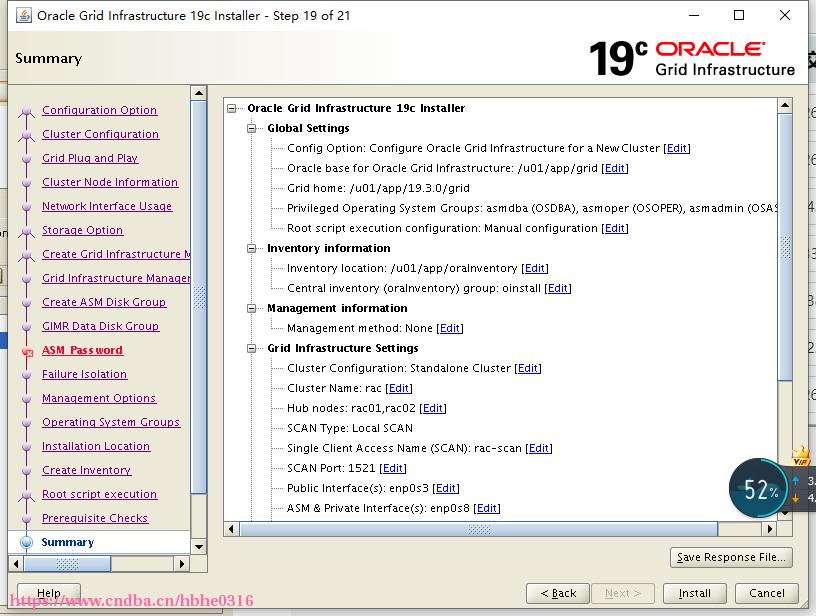

2 Install GRID

[grid@rac01 tmp]$ unzip -d /u01/app/19.3.0/grid/ /tmp/LINUX.X64_193000_grid_home.zip

[root@rac01 ~]# rpm -ivh /u01/app/19.3.0/grid/cv/rpm/cvuqdisk-1.0.10-1.rpm

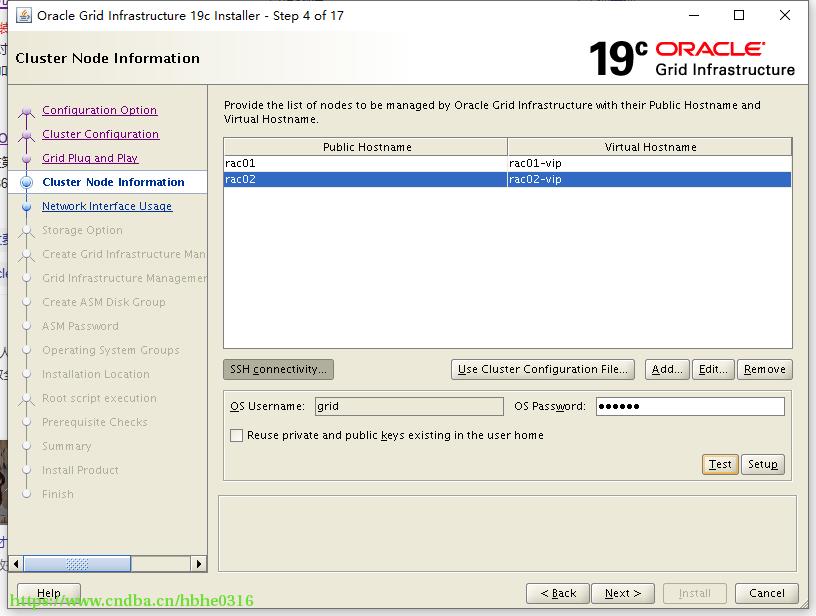

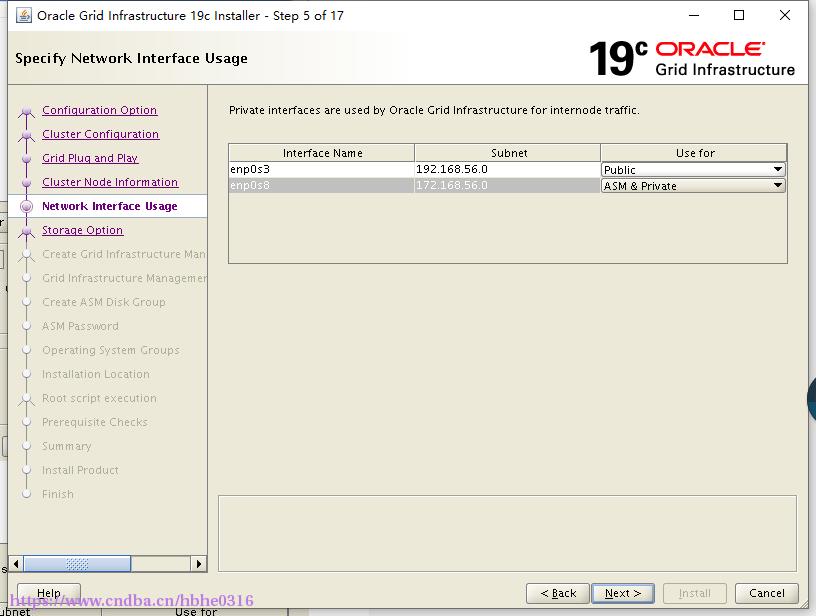

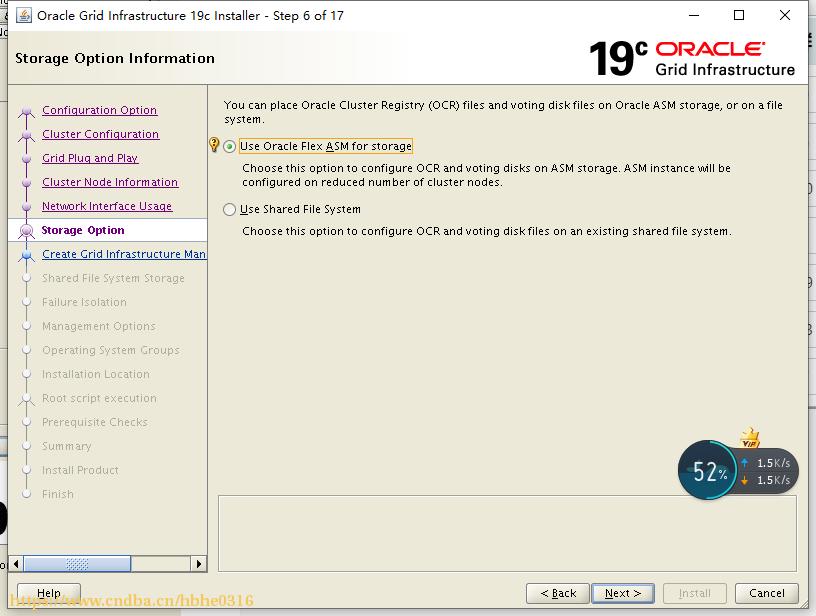

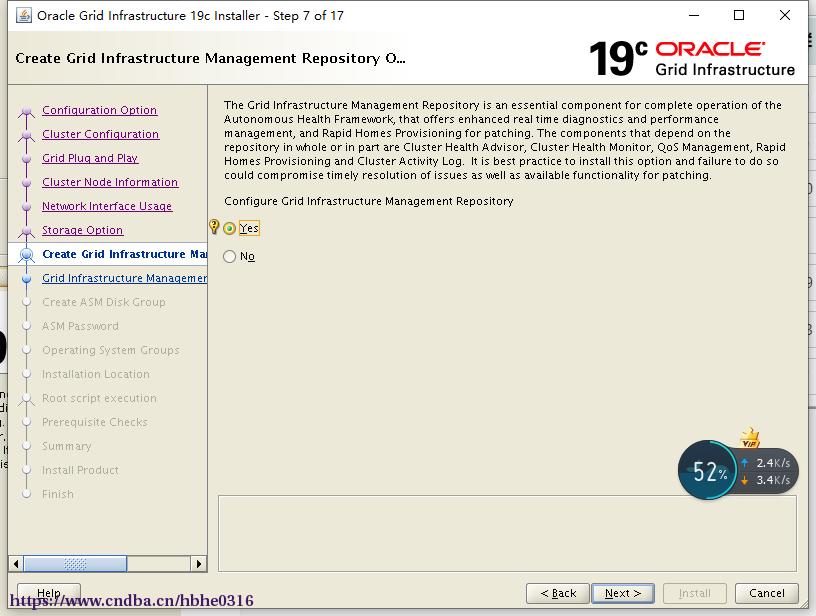

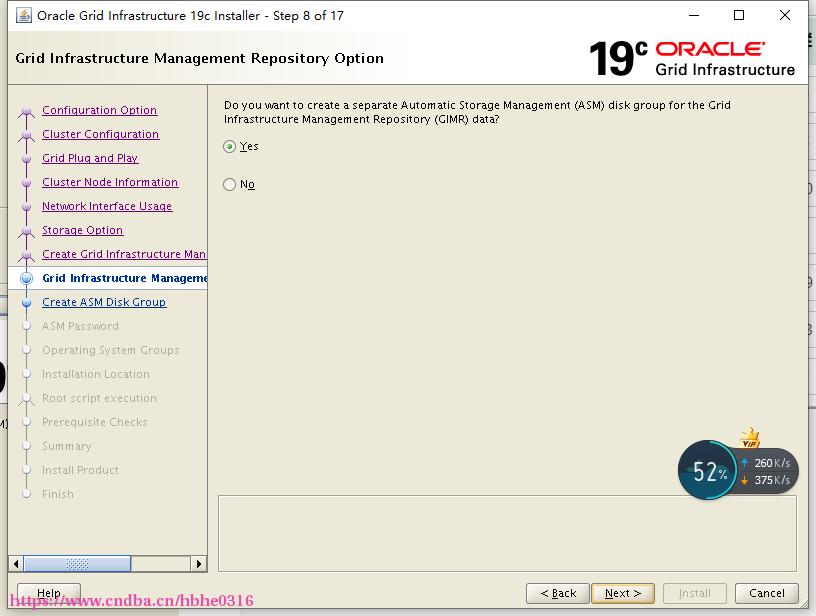

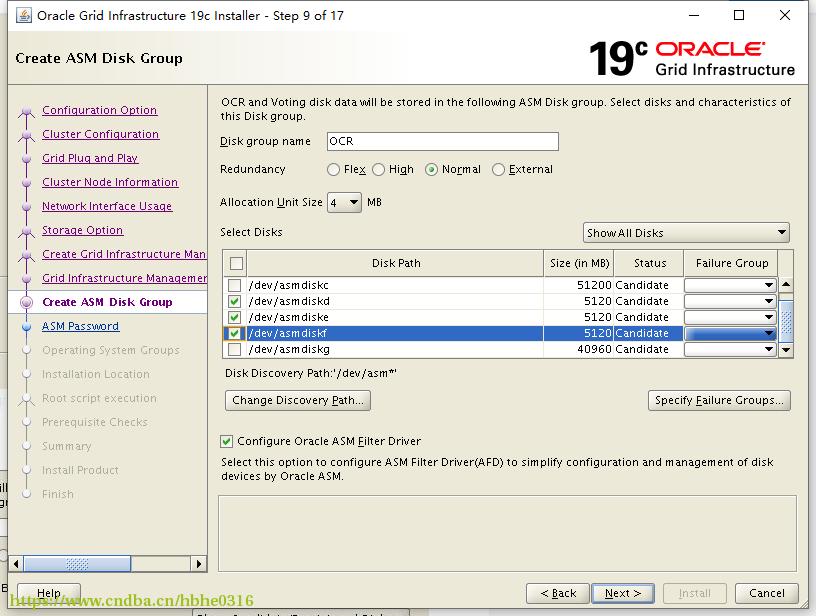

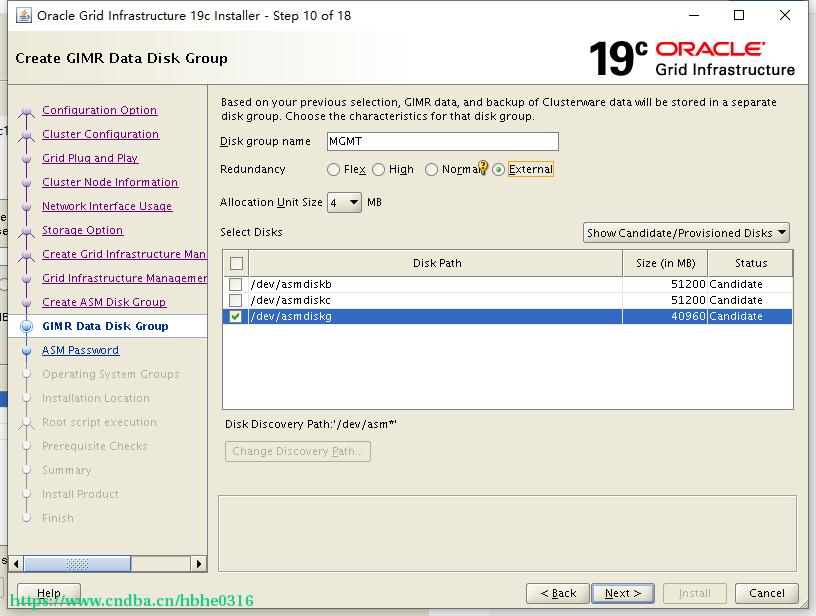

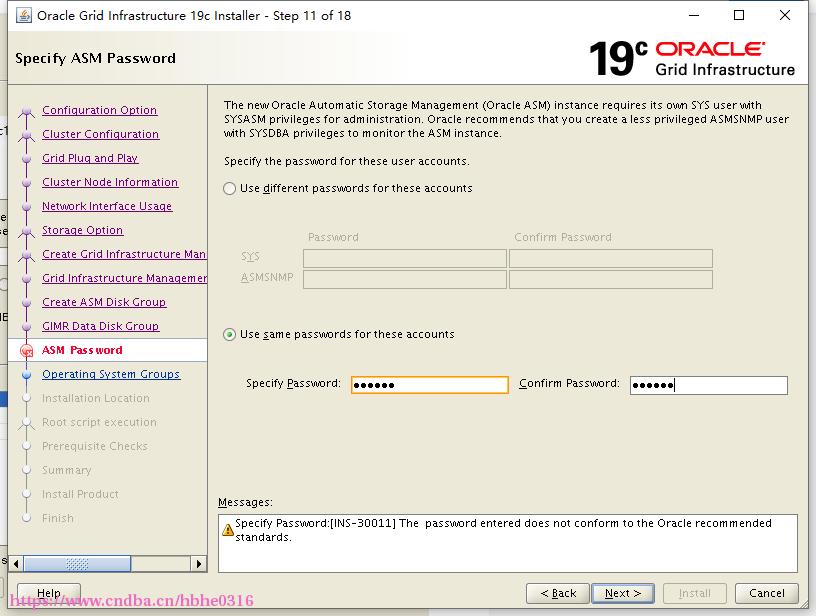

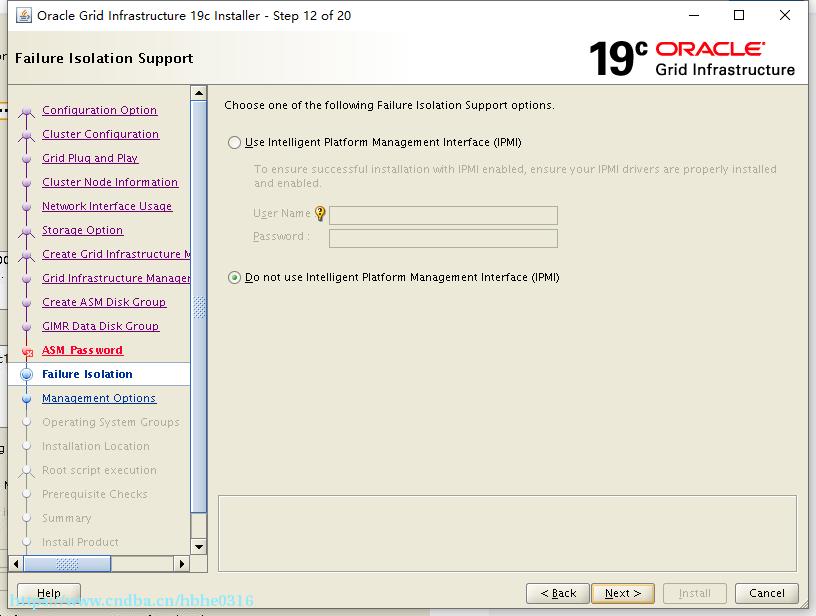

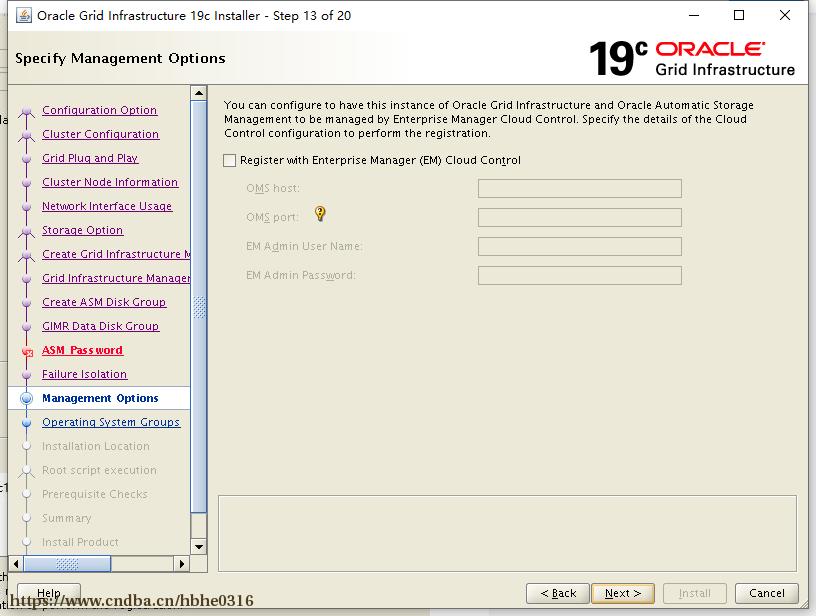

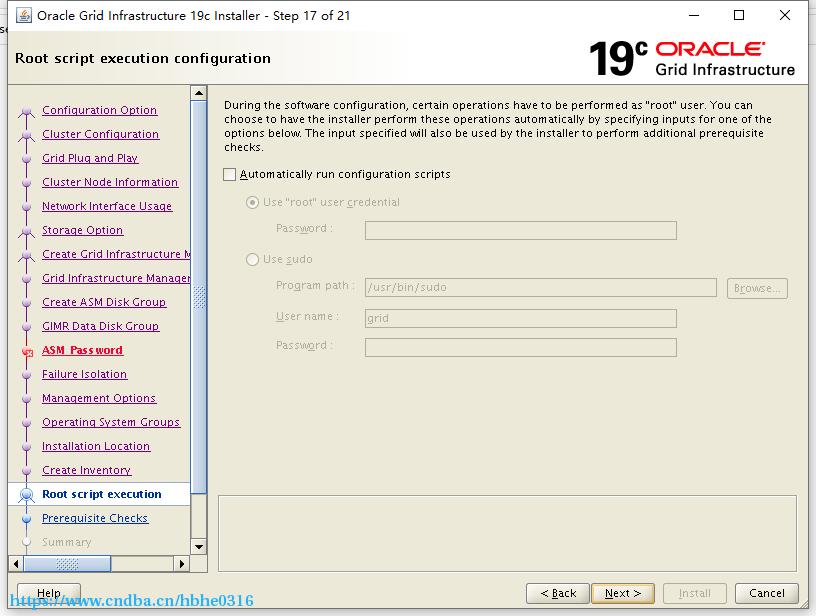

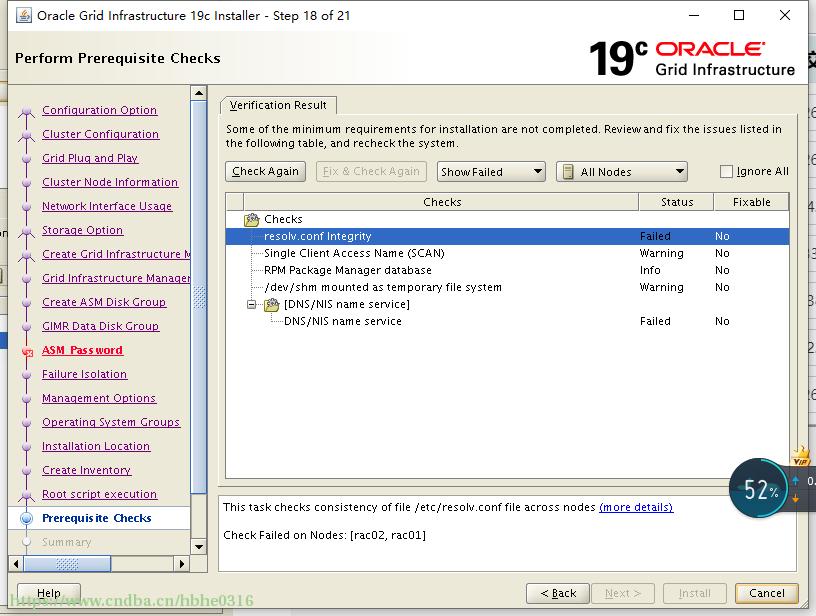

Run the installer script gridSetup.sh

[grid@rac01 tmp]$ cd /u01/app/19.3.0/grid/

[grid@rac01 grid]$ ./gridSetup.sh

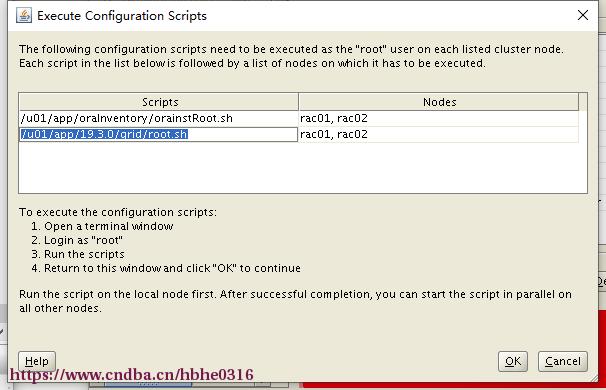

[root@rac01 ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@rac01 ~]# /u01/app/19.3.0/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/19.3.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /u01/app/19.3.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/grid/crsdata/rac01/crsconfig/rootcrs_rac01_2021-09-19_09-53-21PM.log

2021/09/19 21:53:39 CLSRSC-594: Executing installation step 1 of 19: 'SetupTFA'.

2021/09/19 21:53:39 CLSRSC-594: Executing installation step 2 of 19: 'ValidateEnv'.

2021/09/19 21:53:39 CLSRSC-363: User ignored prerequisites during installation

2021/09/19 21:53:39 CLSRSC-594: Executing installation step 3 of 19: 'CheckFirstNode'.

2021/09/19 21:53:43 CLSRSC-594: Executing installation step 4 of 19: 'GenSiteGUIDs'.

2021/09/19 21:53:44 CLSRSC-594: Executing installation step 5 of 19: 'SetupOSD'.

2021/09/19 21:53:44 CLSRSC-594: Executing installation step 6 of 19: 'CheckCRSConfig'.

2021/09/19 21:53:45 CLSRSC-594: Executing installation step 7 of 19: 'SetupLocalGPNP'.

2021/09/19 21:54:01 CLSRSC-594: Executing installation step 8 of 19: 'CreateRootCert'.

2021/09/19 21:54:06 CLSRSC-594: Executing installation step 9 of 19: 'ConfigOLR'.

2021/09/19 21:54:10 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.

2021/09/19 21:54:22 CLSRSC-594: Executing installation step 10 of 19: 'ConfigCHMOS'.

2021/09/19 21:54:23 CLSRSC-594: Executing installation step 11 of 19: 'CreateOHASD'.

2021/09/19 21:54:29 CLSRSC-594: Executing installation step 12 of 19: 'ConfigOHASD'.

2021/09/19 21:54:30 CLSRSC-330: Adding Clusterware entries to file 'oracle-ohasd.service'

2021/09/19 21:55:51 CLSRSC-594: Executing installation step 13 of 19: 'InstallAFD'.

2021/09/19 21:56:48 CLSRSC-594: Executing installation step 14 of 19: 'InstallACFS'.

2021/09/19 21:57:55 CLSRSC-594: Executing installation step 15 of 19: 'InstallKA'.

2021/09/19 21:58:02 CLSRSC-594: Executing installation step 16 of 19: 'InitConfig'.

[INFO] [DBT-30161] Disk label(s) created successfully. Check /u01/app/grid/cfgtoollogs/asmca/asmca-210919PM095839.log for details.

2021/09/19 22:00:02 CLSRSC-482: Running command: '/u01/app/19.3.0/grid/bin/ocrconfig -upgrade grid oinstall'

CRS-4256: Updating the profile

Successful addition of voting disk 5b32d1d5549e4faabf9c5755045a05bc.

Successful addition of voting disk d4565abd962b4ffebfba378cfb2f7a26.

Successful addition of voting disk 6c7aa4c7d5c94f1dbfdd2ee8f189a9da.

Successfully replaced voting disk group with +OCR.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 5b32d1d5549e4faabf9c5755045a05bc (AFD:OCR1) [OCR]

2. ONLINE d4565abd962b4ffebfba378cfb2f7a26 (AFD:OCR2) [OCR]

3. ONLINE 6c7aa4c7d5c94f1dbfdd2ee8f189a9da (AFD:OCR3) [OCR]

Located 3 voting disk(s).

2021/09/19 22:01:54 CLSRSC-594: Executing installation step 17 of 19: 'StartCluster'.

2021/09/19 22:03:12 CLSRSC-343: Successfully started Oracle Clusterware stack

2021/09/19 22:03:12 CLSRSC-594: Executing installation step 18 of 19: 'ConfigNode'.

2021/09/19 22:05:02 CLSRSC-594: Executing installation step 19 of 19: 'PostConfig'.

[INFO] [DBT-30161] Disk label(s) created successfully. Check /u01/app/grid/cfgtoollogs/asmca/asmca-210919PM100510.log for details.

[INFO] [DBT-30001] Disk groups created successfully. Check /u01/app/grid/cfgtoollogs/asmca/asmca-210919PM100510.log for details.

2021/09/19 22:07:18 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

On node 2, the following messages appear; they can be ignored.

Error 4 opening dom ASM/Self in 0x4516ca0

Domain name to open is ASM/Self

Error 4 opening dom ASM/Self in 0x4516ca0

[grid@rac01 ~]$ crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE rac01 STABLE

ONLINE ONLINE rac02 STABLE

ora.MGMT.GHCHKPT.advm

OFFLINE OFFLINE rac01 STABLE

OFFLINE OFFLINE rac02 STABLE

ora.chad

ONLINE ONLINE rac01 STABLE

ONLINE ONLINE rac02 STABLE

ora.helper

OFFLINE OFFLINE rac01 STABLE

OFFLINE OFFLINE rac02 IDLE,STABLE

ora.mgmt.ghchkpt.acfs

OFFLINE OFFLINE rac01 volume /opt/oracle/r

hp_images/chkbase is

unmounted,STABLE

OFFLINE OFFLINE rac02 STABLE

ora.net1.network

ONLINE ONLINE rac01 STABLE

ONLINE ONLINE rac02 STABLE

ora.ons

ONLINE ONLINE rac01 STABLE

ONLINE ONLINE rac02 STABLE

ora.proxy_advm

OFFLINE OFFLINE rac01 STABLE

OFFLINE OFFLINE rac02 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)

1 ONLINE ONLINE rac01 STABLE

2 ONLINE ONLINE rac02 STABLE

3 OFFLINE OFFLINE STABLE

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac01 STABLE

ora.MGMT.dg(ora.asmgroup)

1 ONLINE ONLINE rac01 STABLE

2 ONLINE ONLINE rac02 STABLE

3 OFFLINE OFFLINE STABLE

ora.MGMTLSNR

1 OFFLINE OFFLINE STABLE

ora.OCR.dg(ora.asmgroup)

1 ONLINE ONLINE rac01 STABLE

2 ONLINE ONLINE rac02 STABLE

3 OFFLINE OFFLINE STABLE

ora.asm(ora.asmgroup)

1 ONLINE ONLINE rac01 Started,STABLE

2 ONLINE ONLINE rac02 Started,STABLE

3 OFFLINE OFFLINE STABLE

ora.asmnet1.asmnetwork(ora.asmgroup)

1 ONLINE ONLINE rac01 STABLE

2 ONLINE ONLINE rac02 STABLE

3 OFFLINE OFFLINE STABLE

ora.cvu

1 ONLINE ONLINE rac01 STABLE

ora.qosmserver

1 ONLINE ONLINE rac01 STABLE

ora.rac01.vip

1 ONLINE ONLINE rac01 STABLE

ora.rac02.vip

1 ONLINE ONLINE rac02 STABLE

ora.rhpserver

1 OFFLINE OFFLINE STABLE

ora.scan1.vip

1 ONLINE ONLINE rac01 STABLE

--------------------------------------------------------------------------------

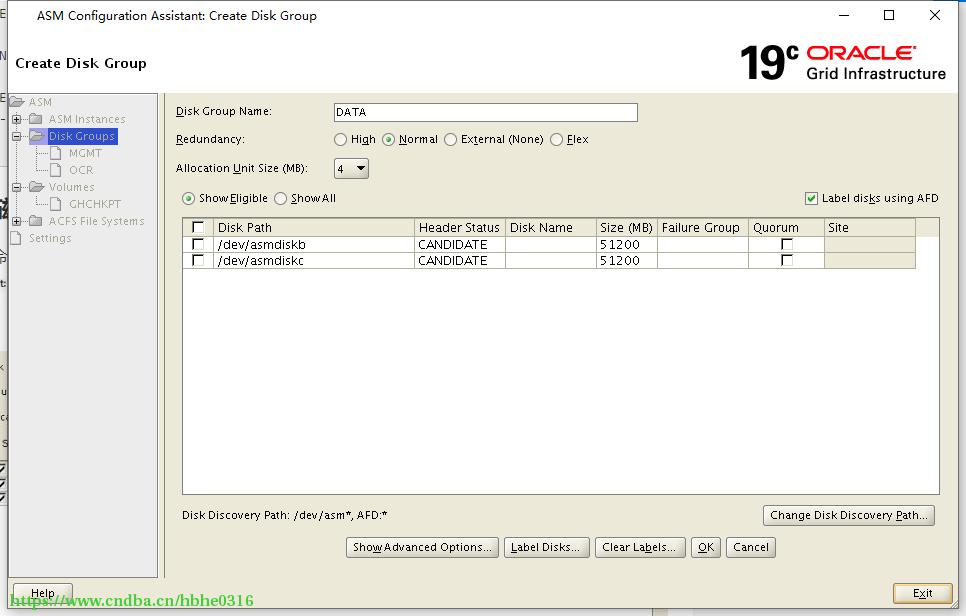

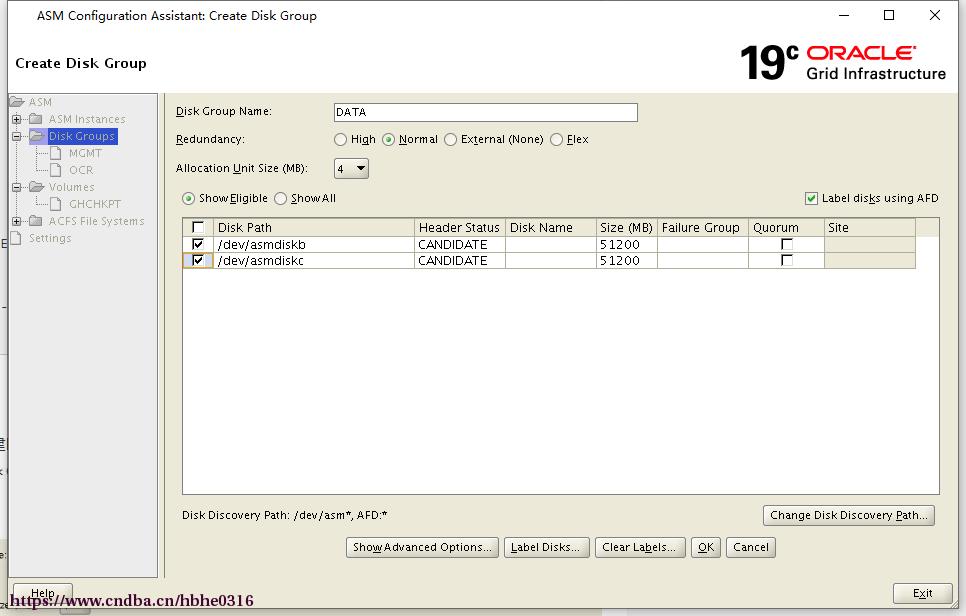

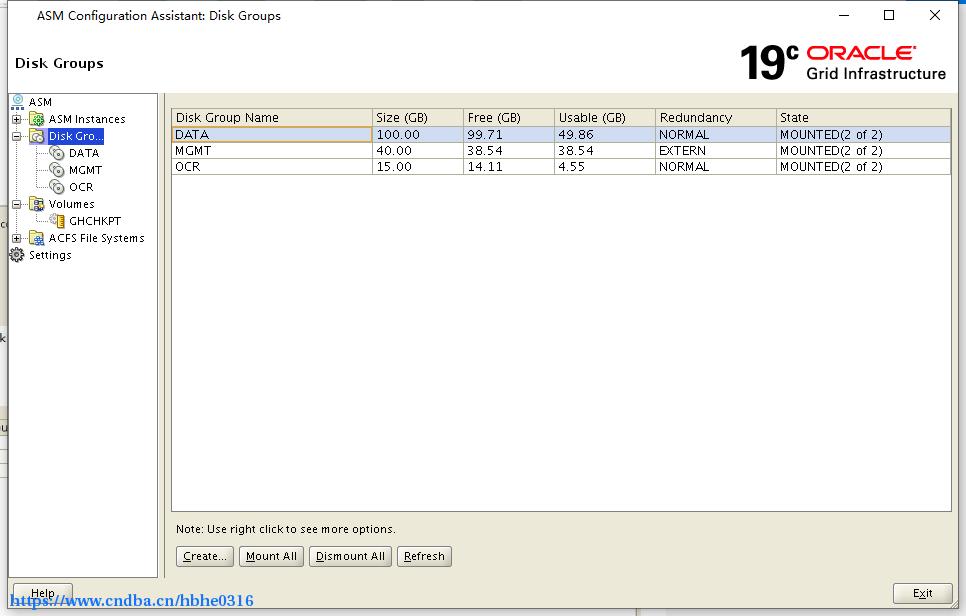

3 Configure the ASM DATA disk group

Run the command as the grid user.

[grid@rac01 ~]$ asmca

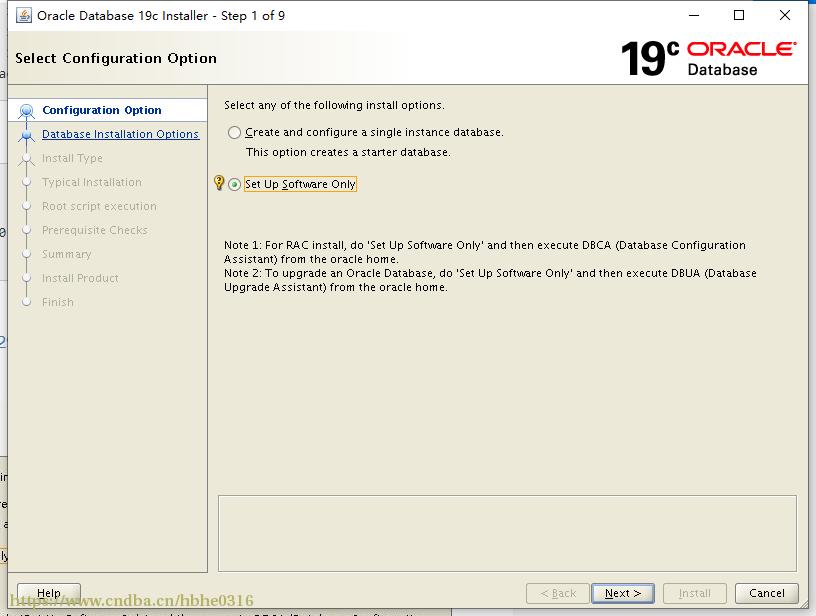

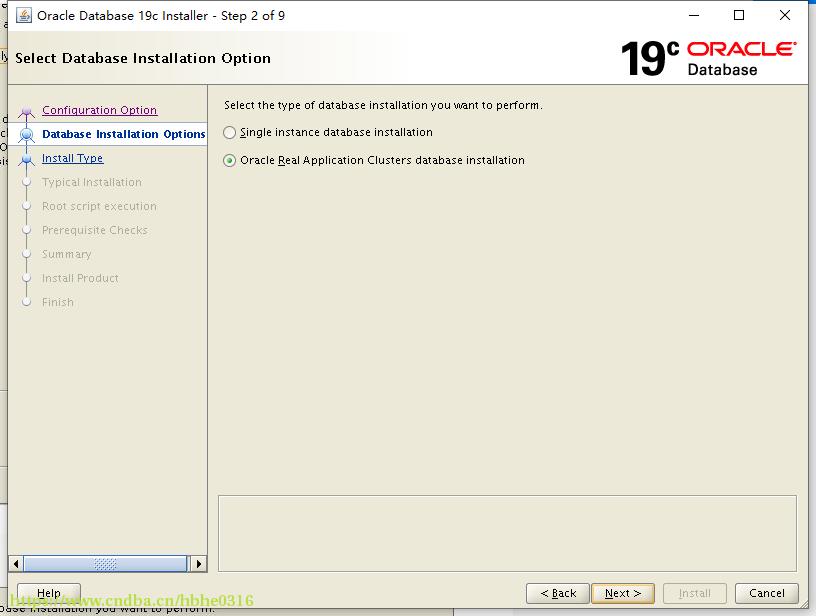

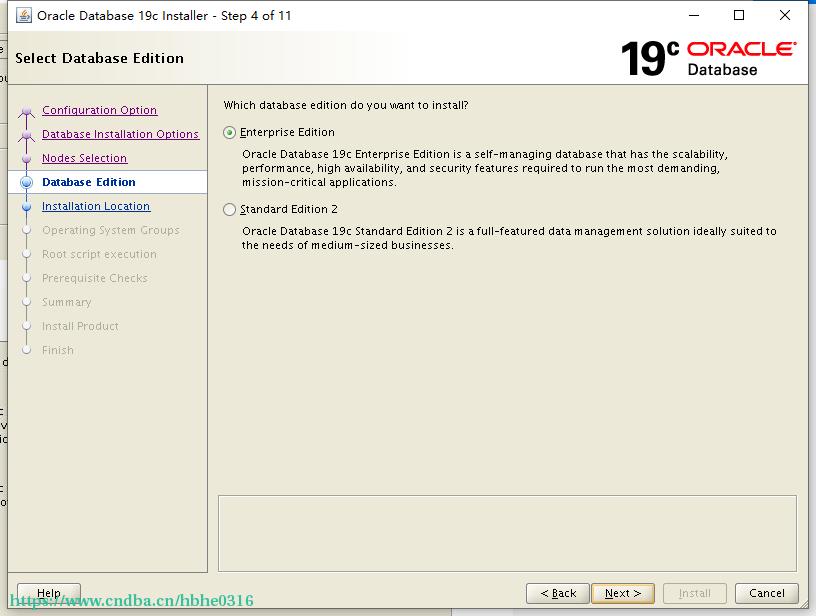

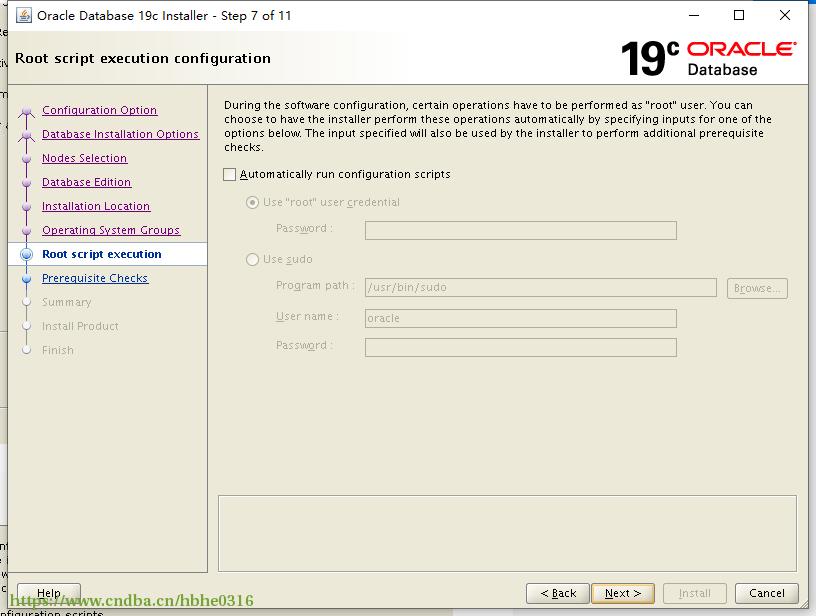

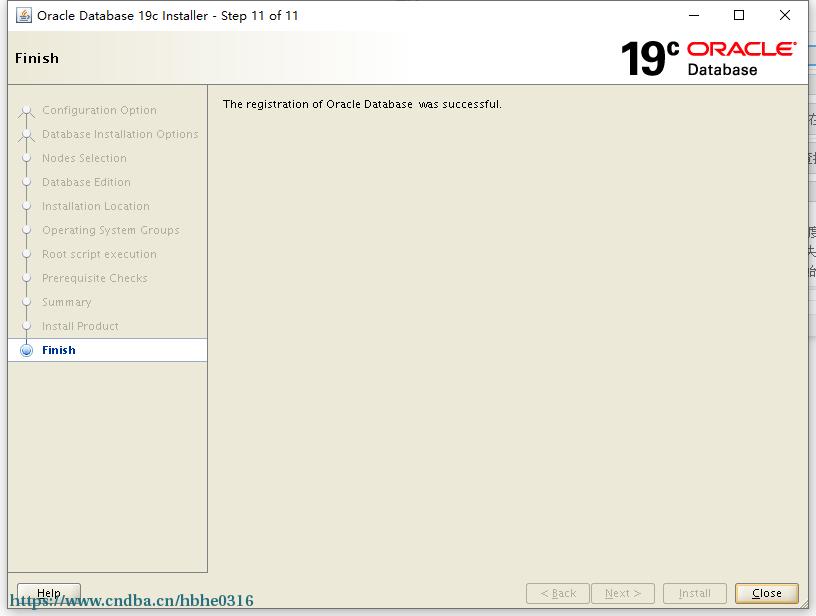

4 Install the DB software

As with grid, unzip the software into ORACLE_HOME as the oracle user. The unzip only needs to be done on node 1.

[oracle@rac01 tmp]$ unzip -d /u01/app/oracle/product/19.3.0/db_1 /tmp/LINUX.X64_193000_db_home.zip

[oracle@rac01 tmp]$ export DISPLAY=192.168.56.1:0.0

[oracle@rac01 tmp]$ cd /u01/app/oracle/product/19.3.0/db_1

[oracle@rac01 db_1]$ ./runInstaller

[root@rac01 ~]# /u01/app/oracle/product/19.3.0/db_1/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/oracle/product/19.3.0/db_1

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

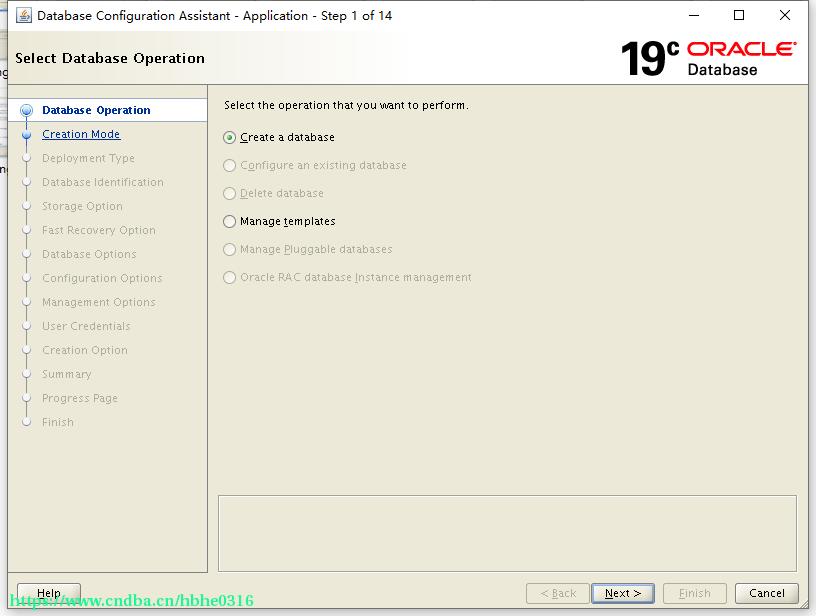

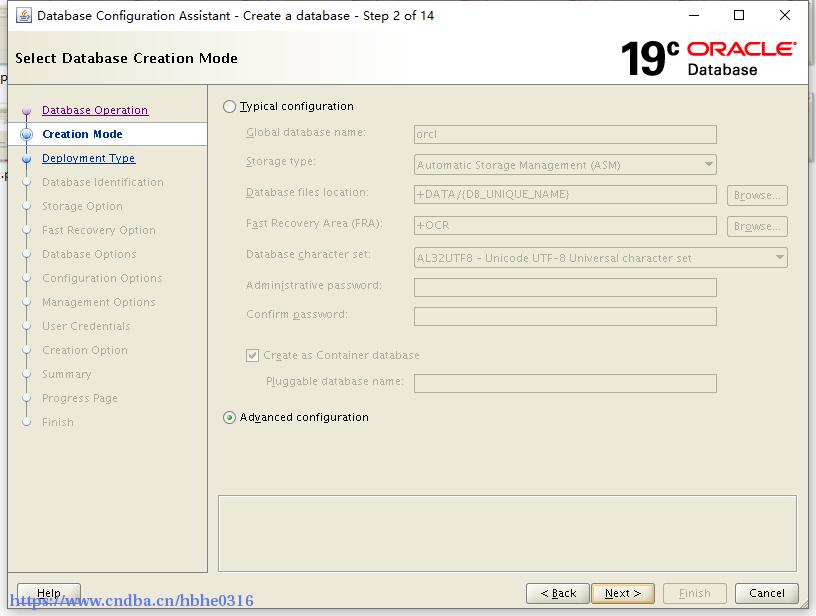

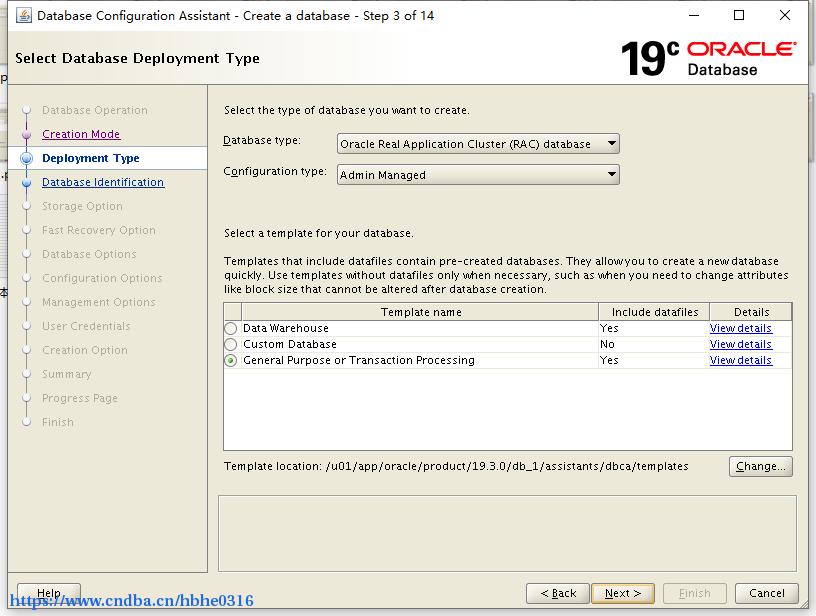

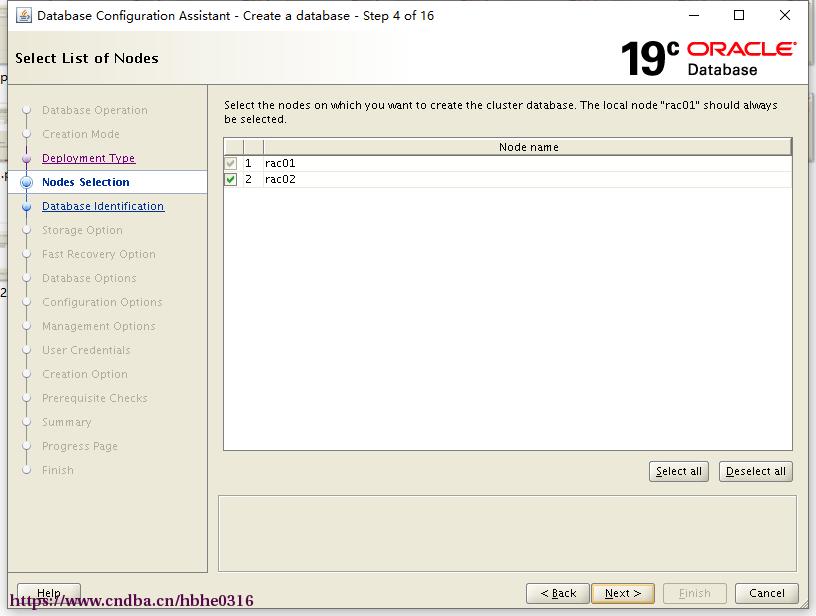

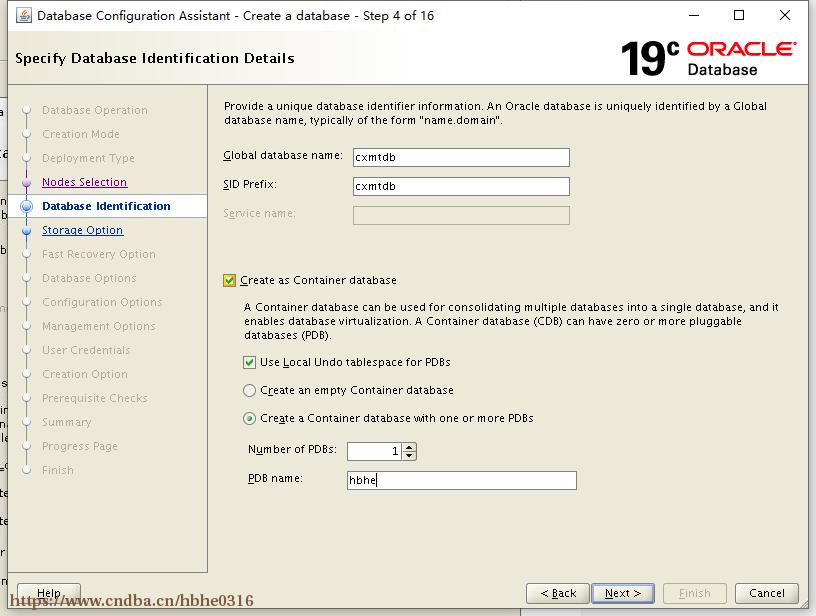

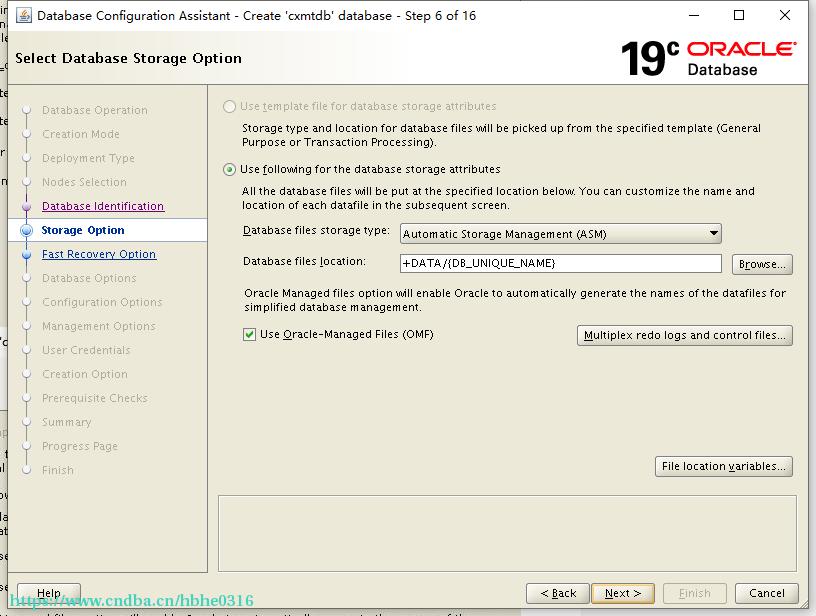

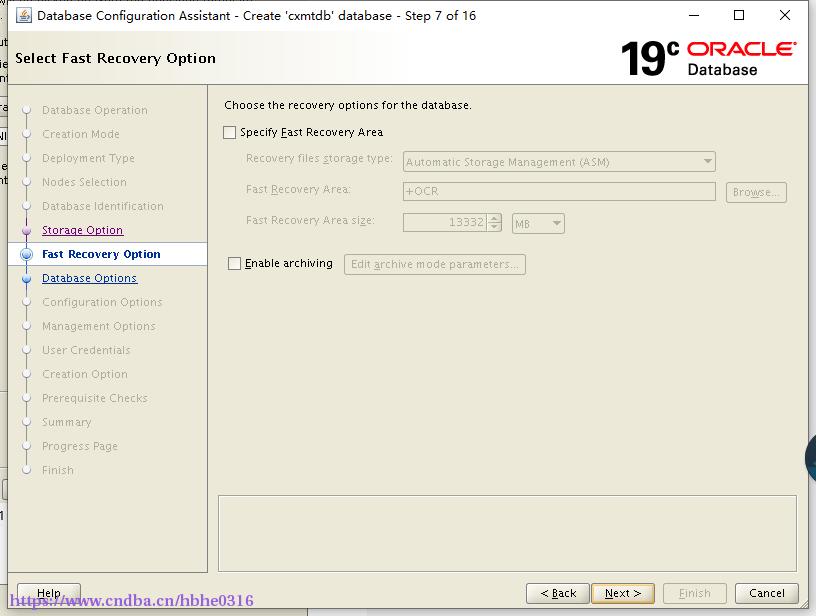

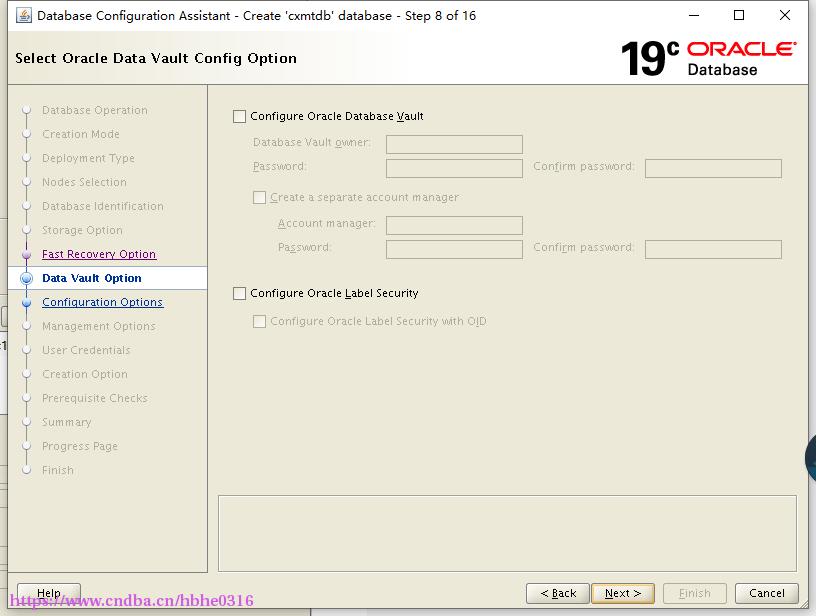

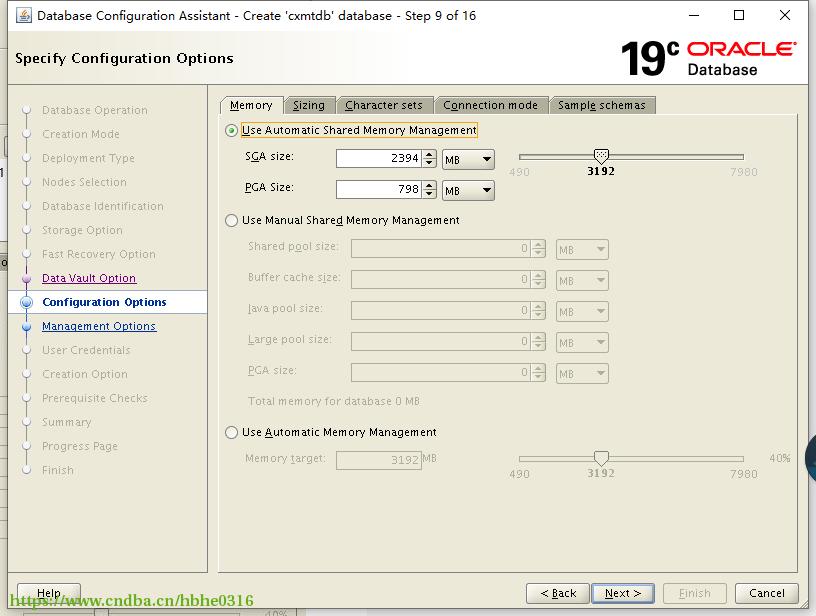

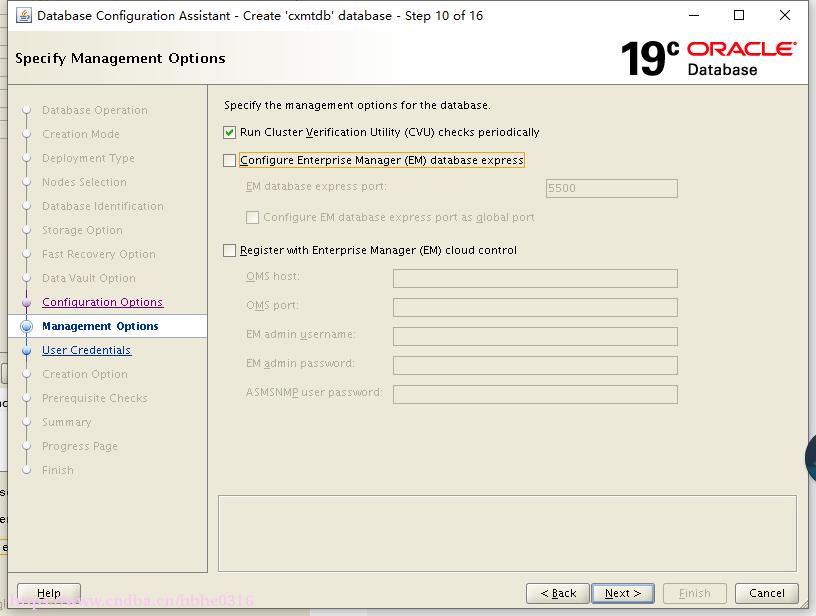

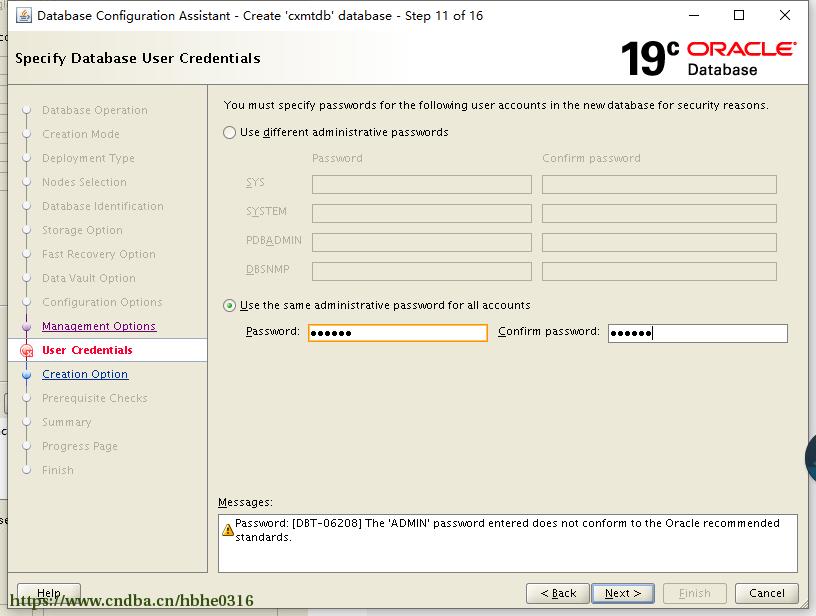

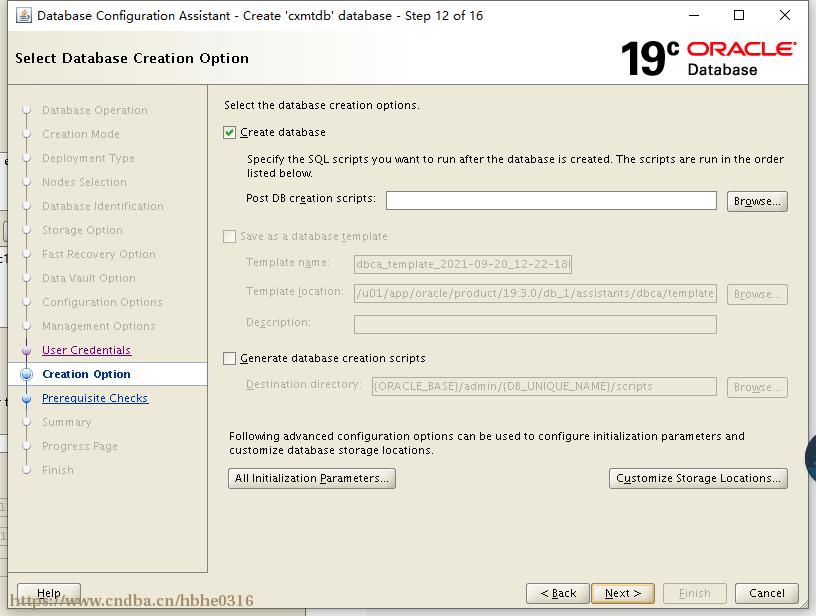

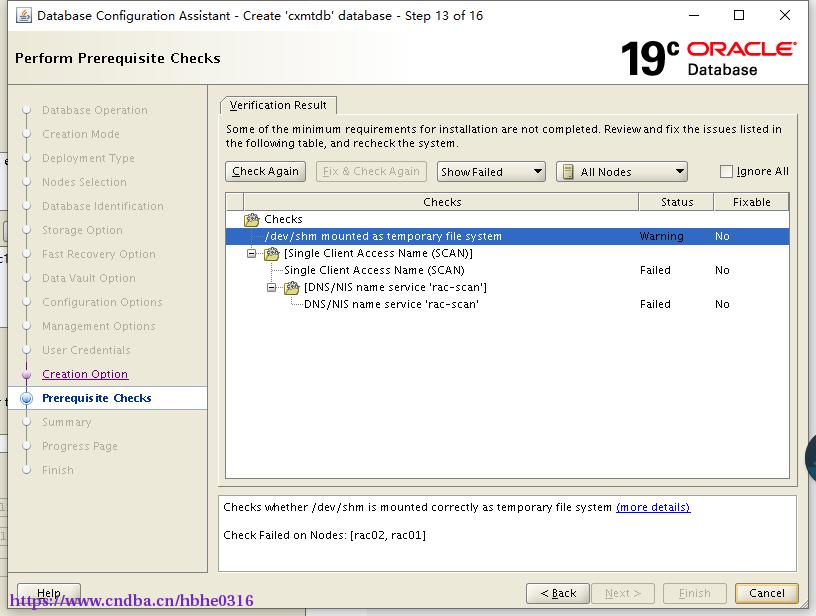

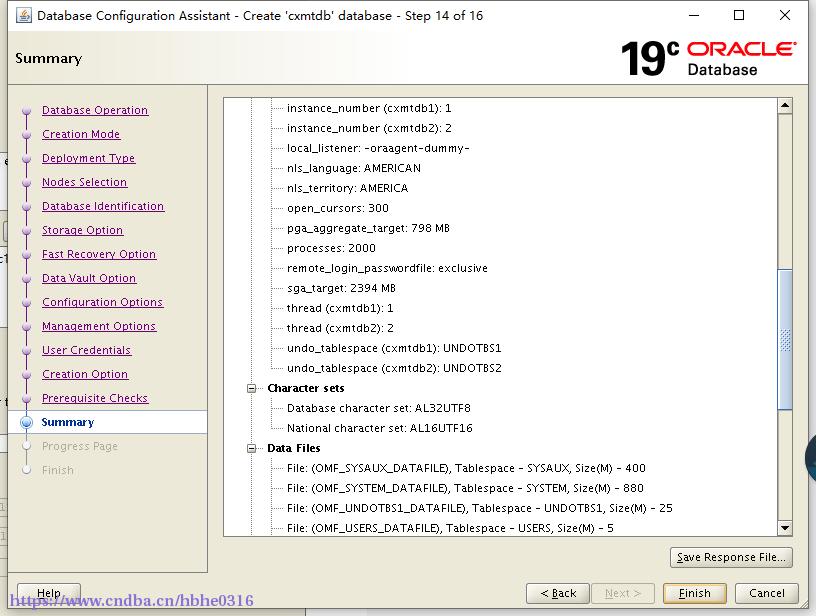

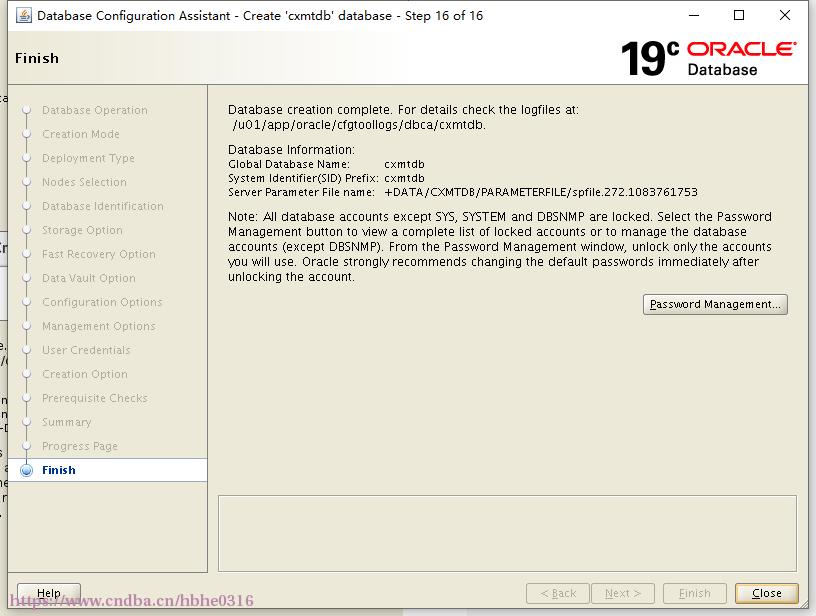

5 Create the database with DBCA

[oracle@rac01 19.3.0]$ dbca

6 Verify RAC status

[grid@rac01 ~]$ crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE rac01 STABLE

ONLINE ONLINE rac02 STABLE

ora.MGMT.GHCHKPT.advm

OFFLINE OFFLINE rac01 STABLE

OFFLINE OFFLINE rac02 STABLE

ora.chad

ONLINE ONLINE rac01 STABLE

ONLINE ONLINE rac02 STABLE

ora.helper

OFFLINE OFFLINE rac01 IDLE,STABLE

OFFLINE OFFLINE rac02 IDLE,STABLE

ora.mgmt.ghchkpt.acfs

OFFLINE OFFLINE rac01 STABLE

OFFLINE OFFLINE rac02 STABLE

ora.net1.network

ONLINE ONLINE rac01 STABLE

ONLINE ONLINE rac02 STABLE

ora.ons

ONLINE ONLINE rac01 STABLE

ONLINE ONLINE rac02 STABLE

ora.proxy_advm

OFFLINE OFFLINE rac01 STABLE

OFFLINE OFFLINE rac02 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)

1 ONLINE ONLINE rac01 STABLE

2 ONLINE ONLINE rac02 STABLE

3 ONLINE OFFLINE STABLE

ora.DATA.dg(ora.asmgroup)

1 ONLINE ONLINE rac01 STABLE

2 ONLINE ONLINE rac02 STABLE

3 ONLINE OFFLINE STABLE

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac02 STABLE

ora.MGMT.dg(ora.asmgroup)

1 ONLINE ONLINE rac01 STABLE

2 ONLINE ONLINE rac02 STABLE

3 OFFLINE OFFLINE STABLE

ora.MGMTLSNR

1 OFFLINE OFFLINE STABLE

ora.OCR.dg(ora.asmgroup)

1 ONLINE ONLINE rac01 STABLE

2 ONLINE ONLINE rac02 STABLE

3 OFFLINE OFFLINE STABLE

ora.asm(ora.asmgroup)

1 ONLINE ONLINE rac01 Started,STABLE

2 ONLINE ONLINE rac02 Started,STABLE

3 OFFLINE OFFLINE STABLE

ora.asmnet1.asmnetwork(ora.asmgroup)

1 ONLINE ONLINE rac01 STABLE

2 ONLINE ONLINE rac02 STABLE

3 OFFLINE OFFLINE STABLE

ora.cvu

1 ONLINE ONLINE rac02 STABLE

ora.cxmtdb.db

1 ONLINE ONLINE rac01 Open,HOME=/u01/app/o

racle/product/19.3.0

/db_1,STABLE

2 ONLINE ONLINE rac02 Open,HOME=/u01/app/o

racle/product/19.3.0

/db_1,STABLE

ora.qosmserver

1 ONLINE ONLINE rac02 STABLE

ora.rac01.vip

1 ONLINE ONLINE rac01 STABLE

ora.rac02.vip

1 ONLINE ONLINE rac02 STABLE

ora.rhpserver

1 OFFLINE OFFLINE STABLE

ora.scan1.vip

1 ONLINE ONLINE rac02 STABLE

--------------------------------------------------------------------------------
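Reading a listing this long by eye is error-prone, so a small filter can pick out the lines worth a second look. This is a sketch only: `crs_not_online` is a hypothetical helper that reads `crsctl stat res -t` output on stdin and prints every instance line whose Target is ONLINE but whose State is something else. In the output above it would report instance 3 of ora.ASMNET1LSNR_ASM.lsnr and ora.DATA.dg; this walkthrough treats that output as a working cluster, so the reported lines call for judgment rather than automatic alarm.

```shell
# Hypothetical helper: list resource instances whose Target is ONLINE
# while their State is not.
# Usage: crsctl stat res -t | crs_not_online
crs_not_online() {
    awk '
        # Resource names start with "ora."; remember the most recent one.
        $1 ~ /^ora\./ { res = $1; next }
        {
            # Instance lines look like "[n] TARGET STATE [server] details".
            # Locate the TARGET column with or without the leading number.
            t = ($1 == "ONLINE" || $1 == "OFFLINE") ? 1 : 2
            if (NF > t && $t == "ONLINE" && $(t + 1) != "ONLINE") {
                $1 = $1    # rebuild the line with single spaces
                print res ": " $0
            }
        }'
}

# Example (as grid):
# crsctl stat res -t | crs_not_online
```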

7 Check database status and version

[oracle@rac01 ~]$ sqlplus / as sysdba

SQL*Plus: Release 19.0.0.0.0 - Production on Mon Sep 20 21:35:02 2021

Version 19.3.0.0.0

Copyright (c) 1982, 2019, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.3.0.0.0

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 HBHE READ WRITE NO

Copyright notice: This is an original article by the author and may not be reproduced without the author's permission.
